The post will walk you through the process of creating, loading and querying partitioned Hive Table via HDInsight.

Follow the steps here to create HDInsight Cluster and to install Windows Azure PowerShell.

Upload data to HDInsight Cluster

Download the file w3c_2MB.txt to your local drive, say to ‘C:\w3c_2MB.txt’

Since HDInsight uses the Azure Blob Storage as its distributed file storage (unlike non-could Hadoop clusters that use the default HDFS implementation that’s based on local file-system), you can choose your preferred tool to upload data. We will use Windows Azure PowerShell to upload the sample data.

Open MIcrosoft Azure PowerShell

Run the command below, you will be prompt to login to your Azure account:

Add-AzureAccount

Paste the following script into the Windows Azure PowerShell console window to run it.

$subscriptionName ="<your-subscription>" $storageAccountName = "<your-storage-name>" $containerName = "<your-cluster-name>" $fileName ="C:\w3c_2MB.txt" $blobName = "user/hdp/myexample/w3c_2MB.txt" # Get the storage account key Select-AzureSubscription $subscriptionName $storageaccountkey = get-azurestoragekey $storageAccountName | %{$_.Primary} # Create the storage context object $destContext = New-AzureStorageContext -StorageAccountName $storageAccountName -StorageAccountKey $storageaccountkey # Copy the file from local workstation to the Blob container Set-AzureStorageBlobContent -File $fileName -Container $containerName -Blob $blobName -context $destContext

Initialize Hive session in Windows Azure PowerShell

Since we are already using Windows Azure PowerShell, we will continue to use it to submit Hive jobs. Quite frankly, PowerShell is not the best tool for ad-hoc interaction with Hive. To debug Hive jobs, (as of now) it’s better to remote connect to the cluster and use the built in Hive CLI. Even for automation, it’s simpler to submit jobs via the .NET SDK. The Hive support in Windows Azure PowerShell might become handy if you already have automated PS script and you want to keep everything in that script.

Before you start running Hive queries via Windows Azure PowerShell (using the Invoke-Hive cmdlet), you need to select your cluster. Paste the following script into the Windows Azure PowerShell console window to run it.

$subscriptionName ="<your-subscription>" $clusterName = "<your-cluster-name>" # Select the cluster Select-AzureSubscription $subscriptionName Use-AzureHDInsightCluster $clusterName

Create Non-Partitioned Hive Table

The script bellow will create a new Hive table name ‘w3c’ and load it with the date that we uploaded to the blob in the previous section

You should know that operations like create/delete execute faster when using New-AzureHDInsightHiveJobDefinition and Start-AzureHDInsightJob instead of Invoke-Hive (more details here). I choose to use Invoke-Hive for all the hive queries in this post to keep things simple.

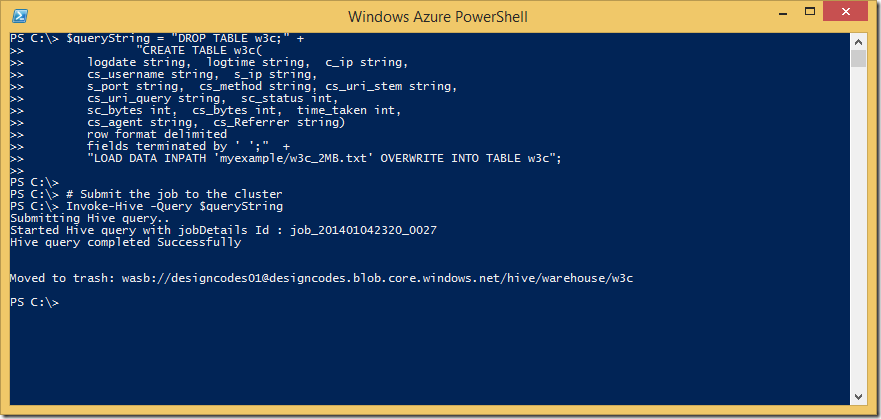

# HiveQL query $queryString = "DROP TABLE w3c;" + "CREATE TABLE w3c( logdate string, logtime string, c_ip string, cs_username string, s_ip string, s_port string, cs_method string, cs_uri_stem string, cs_uri_query string, sc_status int, sc_bytes int, cs_bytes int, time_taken int, cs_agent string, cs_Referrer string) row format delimited fields terminated by ' ';" + "LOAD DATA INPATH 'myexample/w3c_2MB.txt' OVERWRITE INTO TABLE w3c"; # Submit the job to the cluster Invoke-Hive -Query $queryString

Since we created internal table. the file will be deleted from its original location and moved to hive warehouse.

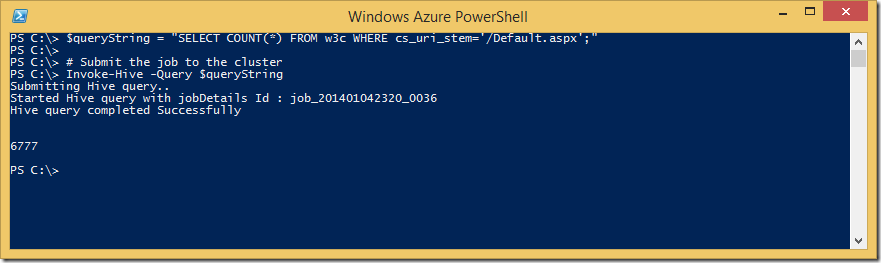

Query the Non-Partitioned Hive Table

The script bellow will count the number of rows where the column cs_uri_stem equals ‘/Default.aspx’

$queryString = "SELECT COUNT(*) FROM w3c WHERE cs_uri_stem='/Default.aspx';" # Submit the job to the cluster Measure-Command { Invoke-Hive -Query $queryString }

Create Partitioned Hive Table

The script bellow will create a new, partitioned Hive table name ‘w3c_partitioned’. Notice that comparing to the table ‘w3c’, cs_uri_stem is not a column, It’s a partition

$queryString = "DROP TABLE w3c_partitioned;" + "CREATE TABLE w3c_partitioned( logdate string, logtime string, c_ip string, cs_username string, s_ip string, s_port string, cs_method string, cs_uri_query string, sc_status int, sc_bytes int, cs_bytes int, time_taken int, cs_agent string, cs_Referrer string) PARTITIONED BY (cs_uri_stem string) row format delimited fields terminated by ' ';" # Submit the job to the cluster Invoke-Hive -Query $queryString

Insert data into the Partitioned Table

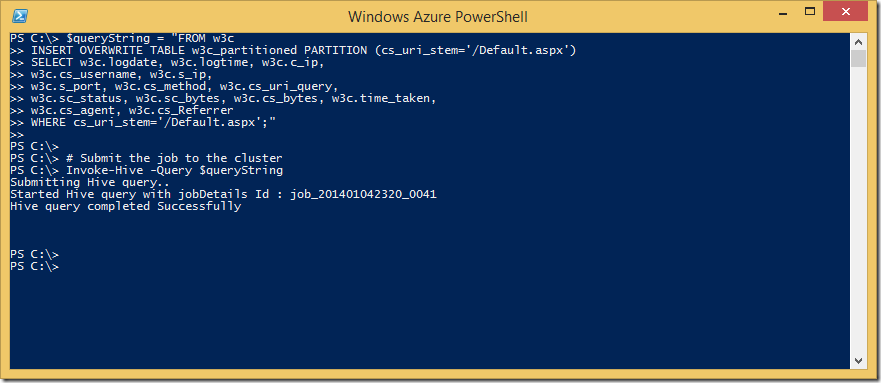

The following script will populate the partitions with data selected from the table w3c.

Notice that:

- We’re inserting the rows where the cs_uri_stem cell equals ‘/Default.aspx‘ into partition ‘cs_uri_stem='/Default.aspx‘.

- We’re inserting the rows where the cs_uri_stem cell equals ‘/Info.aspx‘ into partition ‘cs_uri_stem='/Info.aspx‘.

- We’re inserting the rows where the cs_uri_stem cell equals ‘/UserService‘ into partition ‘cs_uri_stem='/UserService‘

$queryString = "FROM w3c INSERT OVERWRITE TABLE w3c_partitioned PARTITION (cs_uri_stem='/Default.aspx') SELECT w3c.logdate, w3c.logtime, w3c.c_ip, w3c.cs_username, w3c.s_ip, w3c.s_port, w3c.cs_method, w3c.cs_uri_query, w3c.sc_status, w3c.sc_bytes, w3c.cs_bytes, w3c.time_taken, w3c.cs_agent, w3c.cs_Referrer WHERE cs_uri_stem='/Default.aspx';" # Submit the job to the cluster Invoke-Hive -Query $queryString $queryString = "FROM w3c INSERT OVERWRITE TABLE w3c_partitioned PARTITION (cs_uri_stem='/Info.aspx') SELECT w3c.logdate, w3c.logtime, w3c.c_ip, w3c.cs_username, w3c.s_ip, w3c.s_port, w3c.cs_method, w3c.cs_uri_query, w3c.sc_status, w3c.sc_bytes, w3c.cs_bytes, w3c.time_taken, w3c.cs_agent, w3c.cs_Referrer WHERE cs_uri_stem='/Info.aspx';" # Submit the job to the cluster Invoke-Hive -Query $queryString $queryString = "FROM w3c INSERT OVERWRITE TABLE w3c_partitioned PARTITION (cs_uri_stem='/UserService') SELECT w3c.logdate, w3c.logtime, w3c.c_ip, w3c.cs_username, w3c.s_ip, w3c.s_port, w3c.cs_method, w3c.cs_uri_query, w3c.sc_status, w3c.sc_bytes, w3c.cs_bytes, w3c.time_taken, w3c.cs_agent, w3c.cs_Referrer WHERE cs_uri_stem='/UserService';" # Submit the job to the cluster Invoke-Hive -Query $queryString

Looking into the partitioned table in hive warehouse, you should notice that a dedicated folder has been added for each partition

Query the Partitioned Hive Table

The script bellow will query the table w3c_partitioned for the number of rows where the column cs_uri_stem='/Default.aspx' .

$queryString = "SELECT COUNT(*) FROM w3c_partitioned WHERE cs_uri_stem='/Default.aspx';"

# Submit the job to the cluster

Invoke-Hive -Query $queryString

Since the table is partitioned by the column cs_uri_stem, instead of scanning the entire data-set, Hive is scanning only the partition '/Default.aspx‘.

Delete the tables

$queryString = "Drop table w3c;Drop table w3c_partitioned;"

# Submit the job to the cluster

Invoke-Hive -Query $queryString