Since system quality depends on component quality, any defective component causes a ripple effect throughout every system that includes it. Hence, validating and controlling the quality of isolated components is crucial for producing quality systems. To validate component quality, we must follow a cost-effective test process and apply a rigorous quality process to every software component we produce.

Because there are no standardized testability requirements for reusable components, it’s not unusual for components to be designed with poor testability support, which significantly increases the cost of validating and evaluating them. In our experience with software component testing, any shortfall in testability support increases test complexity, reduces test coverage, and creates heavy reliance on test tools and frameworks. Together, these raise the cost of component testing and degrade overall system quality.

Poor testability also indicates that the software test process is ineffective. Like other requirements and design faults, poor testability is expensive to repair when detected late in the software development process. Therefore, we should pay attention to software testability by addressing and verifying all software development artifacts in all development phases, including requirements analysis, design, implementation, and testing.

In this paper, we’ll list a selection of design smells (violations of design principles) that result in poor support for component testability, and present suggested solutions.

Testability requirements

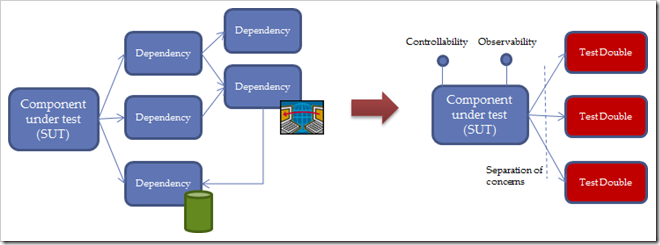

In order for a system to provide the required testability, it needs to be designed in such a way that test code (or any external code) is able to:

- Replace the dependencies of the component under test with test doubles (Separation of Concerns)

- Control the inputs of the component (Controllability)

- Read the relevant outputs to verify that a particular input resulted in the desired outcome (Observability)

- Determine the exact side effects that occurred as a result of applying inputs to the component. For instance, if test code can initiate an asynchronous operation, it has to be able to wait for the operation to complete.

Testability Killers Overview

The basic instinct of many object-oriented designers leads them to produce untestable code. Here’s a selection of the most noticeable misconceptions that result in poor support for component testability.

- Hiding dependency instantiation: Hiding the instantiation of dependency components inside the internal implementation of the component under test.

- Exposing components through concrete type references: Exposing a component through a concrete class reference rather than through a well-defined interface.

- Using global state: Making excessive use of global static fields and/or singletons, in order to share state and establish communication between components.

- Breaking the Law of Demeter: Breaking the Law of Demeter (or ‘the Principle of Least Knowledge’) by injecting a component with richer interfaces than it really needs.

- Preventing access to internal state/functionality: Taking encapsulation to the extreme by exposing component functionality/state in an ad hoc manner and hiding every piece of functionality/state that is not used by other APIs.

Suggested solutions

The first four testability killers are relatively simple and can be solved by adopting the following suggestions, which, besides eliminating the testability killers, have the extra benefit of improving the component API. The fifth testability killer, however, needs special attention, since it’s the source of the biggest tension between testability and pure object-oriented design.

Hiding dependency instantiation

Finding an error (or errors) in an integrated module is much more complicated than first isolating the components, testing each one, and then integrating them and testing the whole. However, to isolate a component, we need to be able to replace its dependencies with test doubles.

Accordingly, a component should never hide the instantiation of dependencies that need to be replaced with test doubles during tests. The best solution is to inject the component with all its dependencies (dependency injection), which lets test code skip instantiating the real dependencies and supply test doubles instead.

When using dependency injection, constructor injection is the preferred choice: it simplifies component instantiation and provides better encapsulation by eliminating the need to expose getter and setter properties for dependencies.

In the following example, Class A hides the instantiation of classes B and C, making it impossible for test code to replace B and C with mocks.

```csharp
public class A
{
    private readonly IB m_b;
    private readonly IC m_c;

    public A()
    {
        // Dependencies are created internally, so tests cannot substitute them.
        m_b = new B();
        m_c = new C();
    }
}

[TestMethod]
public void TestA()
{
    IB bMock = new Mock<IB>().Object;
    IC cMock = new Mock<IC>().Object;

    var a = new A();
    // ??? We can't replace B and C!
}
```

By using dependency injection (applying constructor injection), test code can replace the dependencies with great ease.

```csharp
public class A
{
    private readonly IB m_b;
    private readonly IC m_c;

    // Constructor injection: the caller supplies the dependencies.
    public A(IB b, IC c)
    {
        m_b = b;
        m_c = c;
    }
}

[TestMethod]
public void TestA_DI()
{
    IB bMock = new Mock<IB>().Object;
    IC cMock = new Mock<IC>().Object;

    var a = new A(bMock, cMock);
}
```

Dependency injection vs. Encapsulation

Exposing the dependencies in a constructor is arguably a violation of encapsulation, because the component’s dependency objects are now exposed through the constructor rather than hidden in the component’s internal implementation.

When components are instantiated manually, constructor injection does weaken encapsulation, since the component’s users might inject dependencies holding wrong data, bringing the component into an invalid or inconsistent state. However, when components are instantiated by a DI container and exposed through interfaces, their users are not aware of the constructor, so their dependencies are effectively hidden (despite appearing in the constructor).
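To make the last point concrete, here is a minimal sketch of a hand-written composition root standing in for a DI container. The names `IA`, `CompositionRoot`, `Consumer`, and `Describe` are hypothetical, introduced only for illustration. Only `CompositionRoot` knows A’s constructor; `Consumer` depends on `IA` alone, so A’s dependencies stay invisible to it, while a test can still call `new A(...)` directly with test doubles.

```csharp
using System;

// Hypothetical dependency contracts, named after the article's example.
public interface IB { string Name { get; } }
public interface IC { string Name { get; } }
public interface IA { string Describe(); }

public class B : IB { public string Name => "B"; }
public class C : IC { public string Name => "C"; }

// A receives its dependencies through the constructor.
public class A : IA
{
    private readonly IB m_b;
    private readonly IC m_c;

    public A(IB b, IC c)
    {
        m_b = b ?? throw new ArgumentNullException(nameof(b));
        m_c = c ?? throw new ArgumentNullException(nameof(c));
    }

    public string Describe() => $"A uses {m_b.Name} and {m_c.Name}";
}

// Hypothetical composition root: the only place that calls A's constructor.
// In a real application a DI container plays this role.
public static class CompositionRoot
{
    public static IA CreateA() => new A(new B(), new C());
}

// The consumer sees only IA, never A's constructor parameters.
public class Consumer
{
    private readonly IA m_a;
    public Consumer(IA a) { m_a = a; }
    public string Run() => m_a.Describe();
}
```

Because wiring happens in one place, production code never passes raw dependencies around, which addresses the "wrong data" concern for manually instantiated components.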

Exposing components as concrete type references

Every reusable component should expose a well-documented interface, referred to as its application interface, through which, and only through which, other components can interact with it. Given that a component is injected with interface-based dependencies, test code can easily replace those dependencies with test doubles.

Additionally, we need to make sure that external components provided by other frameworks (which we cannot modify) are not shared through concrete type references. A simple solution is to wrap each external component in a dedicated class (an adapter) that implements a well-defined interface, and to expose the wrapper in such a way that its implementation can be replaced by a test double.

Let’s see how we can share the CompositionContainer class provided by the ‘Managed Extensibility Framework’ (MEF) through a well-defined interface, which will allow test code to replace all calls to the CompositionContainer with alternative implementations.

Here’s the wrapper class. As you can see, it implements the ICompositionContainer interface and delegates incoming calls to the CompositionContainer class.

```csharp
using System.ComponentModel.Composition;          // SatisfyImportsOnce / ComposeParts extension methods
using System.ComponentModel.Composition.Hosting;  // CompositionContainer, AggregateCatalog

public interface ICompositionContainer
{
    void SatisfyImports(object o);
    void ComposeParts(params object[] attributedParts);
}

// Adapter: delegates every call to MEF's CompositionContainer.
public class CompositionContainerWrapper : ICompositionContainer
{
    private readonly CompositionContainer m_container;

    // The catalog is passed in rather than read from an undeclared field.
    public CompositionContainerWrapper(AggregateCatalog aggregateCatalog)
    {
        m_container = new CompositionContainer(aggregateCatalog);
    }

    public void SatisfyImports(object o)
    {
        m_container.SatisfyImportsOnce(o);
    }

    public void ComposeParts(params object[] attributedParts)
    {
        m_container.ComposeParts(attributedParts);
    }
}
```

Workarounds

Mock frameworks such as Moq and Rhino Mocks, which rely on Castle DynamicProxy for proxy generation, can also create mocks for concrete types. However, they can only override virtual members (or implement interfaces), and they do not prevent the real dependencies from being instantiated, because the mocked class’s constructor still runs.
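To see both limitations without pulling in a mocking library, here is a hand-written subclass, which is roughly what a proxy-based mock generates for a concrete class. `ExpensiveConnection`, `ReportService`, and `FakeReportService` are hypothetical names. The override replaces the virtual `LoadTitle`, but the base constructor still creates the real `ExpensiveConnection`.

```csharp
using System;

// Hypothetical "real" dependency we would rather not create in tests.
public class ExpensiveConnection
{
    public static int InstancesCreated { get; private set; }
    public ExpensiveConnection() { InstancesCreated++; }
}

public class ReportService
{
    private readonly ExpensiveConnection m_connection;

    public ReportService()
    {
        // Hidden instantiation: runs even when a subclass or proxy is created.
        m_connection = new ExpensiveConnection();
    }

    public bool HasConnection => m_connection != null;

    // Only virtual members can be overridden by a subclass-based double.
    public virtual string LoadTitle() => "from database";

    // Non-virtual: a subclass cannot replace this method itself.
    public string Header() => "Report: " + LoadTitle();
}

// Hand-written equivalent of a subclass proxy.
public class FakeReportService : ReportService
{
    public override string LoadTitle() => "fake title";
}
```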

Other frameworks, such as TypeMock, are built on the CLR profiler API (a COM-based API that lets them intercept calls and replace IL code), which enables them to replace non-virtual methods as well.

Here’s how you replace the CompositionContainer with a dummy (null) test double using TypeMock:

```csharp
var fake = Isolate.Fake.Instance<CompositionContainer>(Members.ReturnNulls);
```

However, TypeMock requires a license, and in many cases it makes test code unreadable and harder to maintain as the system grows. Mock frameworks like TypeMock are recommended for legacy code, but for new code, designing for testability and designing the right test doubles is preferred.
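For new code, a hand-written test double is often all that is needed. This sketch reuses the ICompositionContainer interface from the wrapper example; `RecordingCompositionContainer` and `PluginHost` are hypothetical names. The fake records the calls it receives instead of composing anything, so a test can check how the component used the container without touching MEF or a commercial isolation tool.

```csharp
using System.Collections.Generic;

// Same interface as the wrapper example.
public interface ICompositionContainer
{
    void SatisfyImports(object o);
    void ComposeParts(params object[] attributedParts);
}

// Hypothetical test double: records calls instead of performing composition.
public class RecordingCompositionContainer : ICompositionContainer
{
    public List<object> SatisfiedObjects { get; } = new List<object>();
    public List<object> ComposedParts { get; } = new List<object>();

    public void SatisfyImports(object o) => SatisfiedObjects.Add(o);

    public void ComposeParts(params object[] attributedParts) =>
        ComposedParts.AddRange(attributedParts);
}

// Hypothetical component under test that depends only on the interface.
public class PluginHost
{
    private readonly ICompositionContainer m_container;

    public PluginHost(ICompositionContainer container) { m_container = container; }

    public void Initialize() => m_container.SatisfyImports(this);
}
```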

Using global state

As much as possible, we should avoid global state and singletons, as they lead to inconsistency and to misleading APIs that are hard to reason about and therefore hard to test. Again, instead of using singletons to establish communication between components, we should inject each component with its dependencies.

The problem with singletons

In the following test, we want to validate that we can successfully deposit money into a BankAccount object. Here’s the code when using singletons:

```csharp
[TestMethod]
public void TestDeposit()
{
    const int userId = 982;

    // Initialize BankAccount dependencies...
    SystemConfiguration.Instance.LoadAll();
    DatabaseManager.Instance.Init();

    var bankAccount = new BankAccount(userId);

    // Scenario
    bankAccount.Deposit(Money.FromDollars(500));

    // Verify
    Money money = bankAccount.Money;

    Assert.AreEqual(1500, money.Dollars);
}
```

The problem with this design is that test code (or any code, for that matter) needs to know which singletons must be initialized, and in which order, to successfully instantiate and test the BankAccount object. This design also creates the illusion that BankAccount has no dependencies, since its constructor is clean and will stay clean even if the class uses 100 more singletons internally. Additionally, and most importantly, singletons cannot be replaced by test doubles.

The solution is to inject the BankAccount class with all its dependencies through its constructor (preferred) or through setters. Here’s the code:

```csharp
[TestMethod]
public void TestDepositDI()
{
    const int userId = 982;

    // Initialize BankAccount dependencies...
    var systemConfiguration = new SystemConfiguration();
    var databaseManager = new DatabaseManager(systemConfiguration);

    var bankAccount = new BankAccount(userId, databaseManager);

    // Scenario
    bankAccount.Deposit(Money.FromDollars(500));

    // Verify
    Money money = bankAccount.Money;

    Assert.AreEqual(1500, money.Dollars);
}
```

Now the BankAccount API is self-describing: we can’t go wrong, since it cannot be constructed without all of its dependencies. Additionally, test code can easily replace any of its dependencies with test doubles.
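As an illustration of that last point, here is a minimal sketch, assuming the database dependency is hidden behind a hypothetical `IDatabaseManager` interface and simplifying the article’s `Money` type to `decimal`. The in-memory fake seeds a known balance, so the deposit test needs no configuration, no database, and no singletons.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical abstraction over the article's DatabaseManager.
public interface IDatabaseManager
{
    decimal LoadBalance(int userId);
    void SaveBalance(int userId, decimal balance);
}

// In-memory fake: deterministic and isolated per test.
public class InMemoryDatabaseManager : IDatabaseManager
{
    private readonly Dictionary<int, decimal> m_balances = new Dictionary<int, decimal>();

    public InMemoryDatabaseManager(int userId, decimal initialBalance)
    {
        m_balances[userId] = initialBalance;
    }

    public decimal LoadBalance(int userId) => m_balances[userId];

    public void SaveBalance(int userId, decimal balance) => m_balances[userId] = balance;
}

// Simplified BankAccount: every dependency appears in the constructor.
public class BankAccount
{
    private readonly int m_userId;
    private readonly IDatabaseManager m_database;

    public BankAccount(int userId, IDatabaseManager database)
    {
        m_userId = userId;
        m_database = database ?? throw new ArgumentNullException(nameof(database));
    }

    public decimal Balance => m_database.LoadBalance(m_userId);

    public void Deposit(decimal amount)
    {
        if (amount <= 0) throw new ArgumentOutOfRangeException(nameof(amount));
        m_database.SaveBalance(m_userId, Balance + amount);
    }
}
```

Seeding the fake with a balance of 1000 makes the article’s expected result of 1500 after a 500 deposit explicit in the test itself rather than dependent on whatever the real database contains.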

Here’s how you replace a singleton with a fake test double using TypeMock:

```csharp
var fake = Isolate.Fake.Instance<DatabaseManager>();

// Here we are changing the singleton behavior...
Isolate.WhenCalled(() => fake.CalculatePrice(0)).WillReturn(100);

Isolate.Swap.AllInstances<DatabaseManager>().With(fake);
```

The problem with global state

When using global (static) state, multiple executions of the same code can produce different results. This is disastrous for testing, since it makes it harder to isolate tests so that they don’t depend on one another and can run in any order, as a group or individually.
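A small illustration of the order dependence, using a hypothetical `IdGenerator` that keeps its counter in a static field, next to an instance-based alternative:

```csharp
// Hypothetical component that keeps its state in a static field.
public static class IdGenerator
{
    private static int s_next = 1;

    // Same call, different result on every invocation in the process.
    public static int Next() => s_next++;

    // Tests end up needing a reset hook like this just to stay independent.
    public static void ResetForTests() => s_next = 1;
}

// Instance-based alternative: each test creates its own generator.
public class InstanceIdGenerator
{
    private int m_next = 1;
    public int Next() => m_next++;
}
```

A test asserting that `IdGenerator.Next()` returns 1 passes when run alone but fails if any earlier test in the same process already called `Next()`, unless every test remembers to call the reset hook. Two `InstanceIdGenerator` objects never interfere with each other.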

Breaking the Law of Demeter

According to the Law of Demeter (LoD), each unit should have only limited knowledge about other units. The interpretation of the law is that object A cannot “reach through” object B to access yet another object, as doing so would mean that object A implicitly requires greater knowledge of object B’s internal structure. Additionally, an object should avoid invoking methods of a member object returned by another method; this can be stated simply as "use only one dot". That is, the code "a.b.Method()" breaks the law where "a.Method()" does not.

From a testing perspective, breaking the LoD forces the creation of redundant dependencies or test doubles when it comes to testing the class in isolation.

Consider the following code:

```csharp
interface IContext
{
    Engine Engine { get; }
    TransactionManager Manager { get; }
}

class Mechanic
{
    readonly Engine m_engine;

    public Mechanic(IContext context)
    {
        // The context is used only to reach the engine.
        m_engine = context.Engine;
    }

    public void Fix()
    {
        RepairExecutor.Repair(m_engine);
    }
}
```

Notice that the Mechanic class is injected with a context that is used only to retrieve the Engine instance. The Mechanic does not care about the context; you can tell because it doesn’t store a reference to it. Instead, the Mechanic traverses the context to find what it really needs: the Engine.

Here’s the test code for the Mechanic class:

```csharp
[TestMethod]
public void TestMechanic()
{
    var engine = new Engine();
    var configuration = new SystemConfiguration();
    var databaseManager = new DatabaseManager(configuration);
    var transactionManager = new TransactionManager(databaseManager);
    var context = new Context(transactionManager, engine);
    var mechanic = new Mechanic(context);

    mechanic.Fix();

    // Test result...
}
```

As you can see, using the Context class didn’t come cheap: we had to instantiate (or mock) all of its dependencies as well.

Here’s a Mechanic class that follows the LoD:

```csharp
class Mechanic
{
    readonly Engine m_engine;

    // Only the dependency the class actually uses is injected.
    public Mechanic(Engine engine)
    {
        m_engine = engine;
    }

    public void Fix()
    {
        RepairExecutor.Repair(m_engine);
    }
}
```

Instead of injecting the Mechanic class with the Context class, we injected it with only what it needs, i.e. the Engine.

Here’s the test code:

```csharp
[TestMethod]
public void TestMechanic_Lod()
{
    var engine = new Engine();
    var mechanic = new Mechanic(engine);

    mechanic.Fix();

    // Test result...
}
```

Preventing access to internal state

As you can see, many testability headaches can be solved simply by applying the dependency injection (DI) pattern and adhering to the Law of Demeter (LoD).

However, DI and LoD alone don’t satisfy all testability demands. To test components in isolation effectively and efficiently, test code needs access to internal state and functionality that the component doesn’t expose to its non-test consumers.

This testability requirement is the source of the biggest tension between testability and pure, by-the-book object-oriented design. Good object-oriented design preaches encapsulation and information hiding, while test code often requires access to internal state that is hidden for the sake of strict encapsulation.

Since encapsulation is vital both for preventing users from putting a component’s internal data into an invalid or inconsistent state and for limiting the interdependencies between software components, we must find a way to keep the component encapsulated while still giving test code effective access to the required state and functionality.

A first-aid solution is to relax encapsulation wherever that is possible without allowing external code to bring the component into an invalid or inconsistent state. Hiding as much functionality as possible and exposing it in an ad hoc manner may be an easy way to guarantee encapsulation, but it’s not the right design for promoting testability.

If the required functionality cannot be exposed while preserving the component’s invariants, the component should provide a built-in interface, called a component test interface, to support external interactions for software testing. To prevent non-test components from using the test interface by mistake, the component should implement it explicitly, so that its members are not part of the class’s public surface and external code cannot reach them without casting the component to the test interface.

Let’s consider a case where we need to test a component called DataProvider, whose main job is to send commands and queries to a remote web service. Here’s the code for the component under test:

```csharp
public class DataProvider
{
    private readonly WebDataService m_service = new WebDataService();

    public Item[] GetItems()
    {
        return m_service.GetItems();
    }

    public void AddItem(Item item)
    {
        m_service.BeginAddItem(item, ar => { }, null);
    }

    public void Save()
    {
        // Fire-and-forget: callers cannot tell when, or whether, the save completes.
        m_service.BeginSaveChanges(OnSaveCompleted, null);
    }

    private void OnSaveCompleted(IAsyncResult ar)
    {
        m_service.EndSaveChanges(ar);
    }
}
```

Here’s the test case:

- Set the flag IgnoreResourceNotFoundException on the web service to true

- Add item with Id=982

- Save changes

- Check that the item has been added successfully

The problems with implementing this test case through DataProvider’s public interface are as follows:

- The DataProvider doesn’t provide a mechanism to wait for the Save call to complete.

- The DataProvider doesn’t expose the web service so IgnoreResourceNotFoundException cannot be modified.

Let’s try to construct a test:

```csharp
[TestMethod]
public void TestDataProviderAddItem()
{
    var dataProvider = new DataProvider();

    // ??? Set the flag IgnoreResourceNotFoundException to true

    // Add item with Id=982
    var itemToAdd = new Item(982);
    dataProvider.AddItem(itemToAdd);

    // Save changes
    dataProvider.Save();

    // ??? wait for the save operation to complete

    // Check that the item has been added successfully
    var items = dataProvider.GetItems();
    Assert.AreEqual(1, items.Length);
    Assert.AreEqual(itemToAdd, items[0]);
}
```

So what can we do?

We could work around the waiting problem by polling until GetItems() returns the new item, but that raises some difficult questions, such as:

- How long to wait?

- If the save operation fails, how can we know why? Where is the exception?

- How can we check that an item has not been added? Do we have to wait for the entire timeout?

The simple solution is to add a public SaveCompleted event that is raised when the save operation completes, and to expose an IgnoreResourceNotFoundException property that delegates changes to the web service.

Adding a SaveCompleted event doesn’t break the component’s encapsulation and introduces no new risks. In fact, a good practice for enhancing testability is to expose completion monitoring for every public asynchronous operation. However, exposing a public property such as IgnoreResourceNotFoundException is out of the question, as it breaks encapsulation and can be very confusing for the component’s users.
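A completion event is one way to expose completion monitoring; in current C#, a Task-returning method (the Task-based Asynchronous Pattern) is another, and it is a different technique from the event the article uses. The sketch below assumes a hypothetical `FakeRemoteStore` whose `Task.Delay` stands in for network latency; `AsyncDataProvider` is likewise hypothetical. Returning the Task lets a test wait with a timeout and see failures directly, because an exception inside the operation surfaces when the Task is waited on.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical asynchronous dependency.
public class FakeRemoteStore
{
    public bool ShouldFail { get; set; }
    public bool Saved { get; private set; }

    public async Task SaveChangesAsync()
    {
        await Task.Delay(10); // stands in for network latency
        if (ShouldFail) throw new InvalidOperationException("save failed");
        Saved = true;
    }
}

public class AsyncDataProvider
{
    private readonly FakeRemoteStore m_store;

    public AsyncDataProvider(FakeRemoteStore store) { m_store = store; }

    // The returned Task carries both completion and any failure.
    public Task SaveAsync() => m_store.SaveChangesAsync();
}
```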

In order to expose IgnoreResourceNotFoundException without breaking encapsulation, we can have the DataProvider implement a test interface called IDataProviderTesting in an explicit manner, and expose the IgnoreResourceNotFoundException property through it.

Here’s the revised DataProvider:

```csharp
public interface IDataProviderTesting
{
    bool IgnoreResourceNotFoundException { set; }
}

public class DataProvider : IDataProviderTesting
{
    private readonly WebDataService m_service = new WebDataService();

    // Raised when a Save call completes, successfully or not.
    public event EventHandler<SaveCompletedEventArgs> SaveCompleted;

    // Explicit implementation: reachable only through an IDataProviderTesting reference.
    bool IDataProviderTesting.IgnoreResourceNotFoundException
    {
        set { m_service.IgnoreResourceNotFoundException = value; }
    }

    public Item[] GetItems()
    {
        return m_service.GetItems();
    }

    public void AddItem(Item item)
    {
        m_service.BeginAddItem(item, ar => { }, null);
    }

    public void Save()
    {
        m_service.BeginSaveChanges(OnSaveCompleted, null);
    }

    private void OnSaveCompleted(IAsyncResult ar)
    {
        Exception error = null;
        try
        {
            m_service.EndSaveChanges(ar);
        }
        catch (Exception e)
        {
            error = e;
        }

        // Raise the event exactly once, outside the try block, so an exception thrown
        // by a subscriber is not mistaken for a save failure. The ?. operator reads the
        // delegate once, so a concurrent unsubscribe cannot cause a NullReferenceException.
        SaveCompleted?.Invoke(this, error == null
            ? new SaveCompletedEventArgs()
            : new SaveCompletedEventArgs(error));
    }
}
```

Notice that IDataProviderTesting is implemented explicitly: the IgnoreResourceNotFoundException property is not part of DataProvider’s public surface and can only be reached through a reference of type IDataProviderTesting.

Now test code can wait on the SaveCompleted event and change the IgnoreResourceNotFoundException property of the internal web service.
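The test that waits on SaveCompleted calls a `Waiter.Wait(condition, timeout)` helper whose implementation isn’t shown. Here is one possible implementation, a hypothetical sketch built on the standard `SpinWait.SpinUntil(Func<bool>, TimeSpan)` method, which repeatedly evaluates the condition until it returns true or the timeout elapses. Throwing on timeout answers the "how long to wait?" question with a clear failure instead of a silent hang.

```csharp
using System;
using System.Threading;

// Hypothetical implementation of the Waiter helper used by the test.
public static class Waiter
{
    // Polls the condition until it is true; fails the test if the timeout elapses first.
    public static void Wait(Func<bool> condition, TimeSpan timeout)
    {
        if (!SpinWait.SpinUntil(condition, timeout))
        {
            throw new TimeoutException($"Condition was not met within {timeout}.");
        }
    }
}
```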

Here’s the test code with the revised DataProvider

```csharp
[TestMethod]
public void TestDataProviderAddItem_TestabilityInterface()
{
    var dataProvider = new DataProvider();

    // Get the testability interface
    IDataProviderTesting dataProviderTesting = dataProvider;

    // Set the flag IgnoreResourceNotFoundException on the web service to true
    dataProviderTesting.IgnoreResourceNotFoundException = true;

    // Add item with Id=982
    var itemToAdd = new Item(982);
    dataProvider.AddItem(itemToAdd);

    bool saveCompleted = false;
    dataProvider.SaveCompleted += (sender, args) => { saveCompleted = true; };

    // Save changes
    dataProvider.Save();

    // Wait for the save operation to complete
    Waiter.Wait(() => saveCompleted, TimeSpan.FromSeconds(10));

    // Check that the item has been added successfully
    var items = dataProvider.GetItems();
    Assert.AreEqual(1, items.Length);
    Assert.AreEqual(itemToAdd, items[0]);
}
```

Workarounds

There are several approaches to supporting testability without exposing the component’s internal state or implementing test interfaces explicitly. The component can write outputs to traces so that test code can verify them by inspecting the log; software contract postconditions can provide some observability by validating internal state and throwing exceptions that test code can capture; private members can be changed to protected and exposed through subclasses; and reflection can be used to pull out internal state.

Sadly, all of the above lead to an awful test-writing experience and an unhealthy dependency on the component’s implementation. Relying on traces and analyzing logs is confusing and messy; contracts are great for class-level testing but not applicable to component-level testing; changing members to protected requires unreasonable modifications to the component and still breaks encapsulation; and using reflection (magic strings) leads to brittle, unmaintainable test code.
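The brittleness of reflection is easy to demonstrate. In this sketch, `Widget` and `ReflectionProbe` are hypothetical names. The field name is a plain string, so renaming `m_retryCount` still compiles but makes every test that uses the old name fail at run time.

```csharp
using System;
using System.Reflection;

public class Widget
{
    // Private state a test would like to inspect.
    private int m_retryCount = 3;

    public void Touch() => m_retryCount++;
}

public static class ReflectionProbe
{
    // Reads a private instance field by name; the name is a "magic string".
    public static object ReadPrivateField(object target, string fieldName)
    {
        FieldInfo field = target.GetType().GetField(
            fieldName, BindingFlags.Instance | BindingFlags.NonPublic);

        if (field == null)
        {
            // A renamed field is detected only here, when the test runs.
            throw new MissingFieldException(target.GetType().Name, fieldName);
        }

        return field.GetValue(target);
    }
}
```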

Conclusion

The benefits of object-oriented development are threatened by the testing burden that encapsulation and information hiding place upon objects. In terms of both the ease of testing and the value of testing, object-oriented software has been demonstrated to have lower testability than procedural implementations. To address these concerns, we need to eliminate the testability killers as early as the design stage.

This paper outlined some practical approaches for increasing testability while keeping the system properly encapsulated and improving component APIs.

In a nutshell, in order to eliminate the most painful testability killers, we should adhere to the following principles:

- Program to an interface

- Use dependency injection (prefer constructor injection)

- Avoid using global state

- Adhere to the Law of Demeter

- Relax encapsulation without breaking invariants

- Expose testability interfaces (implemented explicitly)
