Every developer who has spent enough time with concurrent applications has learned that producing a reliable, high-performance application that scales gracefully on many-core hardware is extremely difficult.

For the application to scale and maintain high throughput, we have to design the threading model so that at any given time the application runs the optimal number of threads, putting the maximum number of cores to work with minimal contention. To keep contention low, besides not introducing too many threads, it’s crucial to find an efficient way to protect the data shared among the threads from concurrent access.

To make parallel programming easier, .NET Framework 4.0 introduced the TPL (Task Parallel Library) together with new parallelism constructs that make it easier to add concurrency to applications. However, although more and more code is tempted to run in parallel, we get no real help from the runtime in protecting shared data from concurrent access. Applications that require only coarse-grained isolation can get away relatively easily by using techniques such as message passing, which avoids sharing and eliminates the need for locks almost entirely. However, to achieve greater scalability there’s no escape from using fine-grained locks, and so the problems begin… as we’ll find in the next chapter.

In an attempt to provide a solution for data sharing that on the one hand scales, and on the other hand is easier to use and less error-prone than fine-grained locks, Microsoft is working on software transactional memory (STM) support for .NET (a first draft that works on VS2008 is available for download) that should ease the tension between lock granularity and concurrency. With STM, instead of using multiple locks of various kinds to synchronize access to shared objects, we simply wrap all the code that accesses those objects in a transaction and let the runtime execute it atomically and in isolation by doing the appropriate synchronization behind the scenes.

The great benefit that STM provides is that we can write code that looks sequential, can be reasoned about sequentially, and yet runs concurrently in a scalable fashion.

Within sequential code blocks (or ones that can be reasoned about sequentially) we can read and write shared memory without being concerned about other threads changing it midway, and without worrying that our changes will cause other threads to see an invalid intermediate state.

How easy is it really? Studies have shown that developers find transactional memory slightly harder to use than coarse-grained locks and far easier to use than fine-grained locks.

What about performance? Benchmarks (provided by the programming guide) show that some simple-to-construct STM.NET-based implementations scale nearly as well as expert hand-tuned implementations that use fine-grained locks. However, although STM promises to retain excellent scalability characteristics (by automating fine-grained locking), it still comes with significant sequential overhead when running on few cores. Hopefully, further optimizations, a customized memory model and, most importantly, hardware support will result in better performance in future versions.

It’s important to note that no one (including the designers of STM) really knows how STM overhead will affect real-world applications developed by real-world developers. This issue is still being investigated, and that is the main reason why STM for .NET has been made available.

What about hardware support? Sun Microsystems developed a multi-core processor called Rock, which introduced hardware support for transactions of limited size and was considered a ‘modest first step in integrating hardware transactions (HTM) support into a mainstream commercial multi-core processor’. Sadly, after 4 years of research the Rock project was canceled.

Does STM scale without limit? STM scalability has been called into question by many research papers. Some argued that it will never be more than a “research toy”, others that it can scale only up to a point. All in all, the most significant scalability bottleneck associated with STM is the outcome of the so-called ‘privatization problem’ (explained later on), which forces STM to ensure that transactions retire from commit processing in the same order they entered, a fact that naturally has a major effect on the ability of STM to scale.

Looking at the benchmarks (provided by the programming guide), it seems that in non-read-only implementations STM (without the TVAR optimization that eliminates the need for commit ordering, explained later on) stops scaling somewhere near 8 threads, but again, there are still many optimizations ahead.

Any other STMs? Haskell’s STM implementation has been publicly available for several years; it has been used in production systems, and programs have finally been written with it by people other than the language implementers. Since Haskell is a functional language in which impure operations (which read/write shared memory) are separated from ordinary pure operations by the type system, STM fits into it in a much more elegant way than will ever be possible in an imperative environment such as .NET.

In fact, Haskell was used as a kind of “laboratory” to study the ideas of transactional memory in a setting with a very expressive type system.

Why only now? Concurrent programming has been around for some 30 years and has experienced gradual growth. However, only with the spread of many-core machines did it become clear that we need an alternative to lock-based programming that is simpler and less error-prone.

When is the official release? Apparently, there’s a lot of work that needs to be done before STM.NET can be included in an official release (if ever). In any case, according to the dev team it will not be part of the official release of .NET Framework 4.0.

Locking Hazards

Nowadays, the dominant synchronization model is based on locks. Some locks grant exclusive access to only one thread; others grant shared access to readers and exclusive access to writers; there’s the immutability approach, where objects are designed as read-only and cloned on every write, so no lock is required on read; and the list goes on and on. As mentioned above, deciding which locking scheme to use is a difficult task that can make the difference between an application that scales and an application that performs poorly and doesn’t scale. The post ‘Thread Synchronization in .NET’ reviews in detail the pros and cons of many of the locking schemes.

Races, Deadlocks and the like

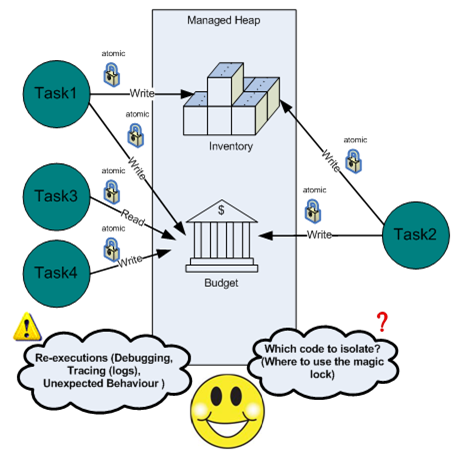

Consider a case where two tasks (Task1 and Task2) that run concurrently read and write inventory and budget objects. Since allowing a thread to write into an object while another is reading or writing it is a recipe for erratic application behavior and possibly even an access violation, we have to match a locking scheme to every piece of code that accesses the inventory and budget objects.

In our case, since Task1 writes into the inventory object and Task2 reads from it, if the reads are frequent and the writes are infrequent and of short duration, we might decide to use the reader-writer lock scheme to synchronize access to the inventory object. And since both tasks write into the budget object, we will most likely do the required synchronization using the one-at-a-time monitor lock.

While implementing the synchronization model, we have to make sure that locks are acquired whenever they need to be acquired, or else we may well encounter a race condition, which is possible even if we use only one lock.

Consider a case where Task2 didn’t acquire a reader lock while traversing the products collection of the inventory object. In such a case, if Task1 happens to modify the collection (e.g. adding or removing a product) while Task2 is traversing it, Task2 is likely to experience an ‘index out of range’ or ‘collection was modified’ exception.

When using multiple locks, we have to make sure that the threads acquire locks in the same order, or else we might encounter a deadlock.

Consider the following scenario: Task1 acquires LockA, then Task2 acquires LockB. Task1 then tries to acquire LockB and has to wait for Task2 to release it; Task2 then tries to acquire LockA and has to wait for Task1 to release it, which will never happen. This would never have happened if Task1 and Task2 had bothered to acquire the locks in the same order.
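To make the "same order" rule concrete, here is a minimal sketch. The names `LockOrderingDemo`, `UpdateBoth` and `Run` are made up for illustration, and `LockA`/`LockB` simply stand in for the two locks in the scenario. Both tasks always take `LockA` before `LockB`, so neither can end up holding `LockB` while waiting for `LockA`.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical demo type; LockA and LockB stand in for the locks in the scenario.
public static class LockOrderingDemo
{
    private static readonly object LockA = new object();
    private static readonly object LockB = new object();
    private static int s_counter;

    // Every caller takes LockA first and LockB second. Because the order is
    // the same everywhere, a thread holding LockB is never waiting for LockA.
    private static void UpdateBoth()
    {
        lock (LockA)
        {
            lock (LockB)
            {
                s_counter++;
            }
        }
    }

    // Runs two tasks that both need both locks and returns the final counter.
    public static int Run(int iterationsPerTask)
    {
        s_counter = 0;
        Task task1 = Task.Run(() =>
        {
            for (int i = 0; i < iterationsPerTask; i++) UpdateBoth();
        });
        Task task2 = Task.Run(() =>
        {
            for (int i = 0; i < iterationsPerTask; i++) UpdateBoth();
        });
        Task.WaitAll(task1, task2);
        return s_counter;
    }
}
```

Calling `LockOrderingDemo.Run(10000)` completes and returns 20000. Swapping the nesting order in only one of the two tasks would reintroduce the deadlock described above.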

The Composition Problem

Races, deadlocks and the like aside, perhaps the most fundamental objection to locks is that they do not compose, i.e. abstractions that expose thread-safe operations may produce correct results when used independently, but expose invalid intermediate state when combined.

Consider an application that contains two hashtables with thread-safe ‘add’ and ‘remove’ operations.

```csharp
using System.Collections;

public class MultiWriteHashtable
{
    Hashtable m_hashtable = new Hashtable();

    object m_hashLock = new object();

    public void AddSafe(object key, object value)
    {
        lock (m_hashLock)
        {
            m_hashtable.Add(key, value);
        }
    }

    public void RemoveSafe(object key)
    {
        lock (m_hashLock)
        {
            m_hashtable.Remove(key);
        }
    }

    public object this[object key]
    {
        get
        {
            // No need to lock, Hashtable is thread safe
            // for use by multiple reader threads and a single
            // writing thread
            return m_hashtable[key];
        }
    }

    public object SyncRoot
    {
        get
        {
            return m_hashtable.SyncRoot;
        }
    }
}
```

The application needs to support deleting an item from one table and inserting it into the other, while the intermediate state (between the delete and the insert), in which neither hashtable contains the item, must not be visible to other threads.

The problem is that unless we do evil things like breaking lock encapsulation, there is simply no way to satisfy this requirement.

The following code is obviously racy and likely to (some day) throw InvalidOperationException when executed in parallel.

```csharp
public static void MoveProductNotSafe(int productKey,
    MultiWriteHashtable source, MultiWriteHashtable destination)
{
    Product product = (Product)source[productKey];

    source.RemoveSafe(productKey);

    // At this point other threads see an intermediate state that
    // conflicts with the business rules.

    destination.AddSafe(productKey, product);
}

public static Product GetProductNotSafe(int productKey,
    MultiWriteHashtable table1, MultiWriteHashtable table2)
{
    Product product;
    product = (Product)table1[productKey];

    if (product == null)
    {
        product = (Product)table2[productKey];
    }

    if (product == null)
    {
        throw new InvalidOperationException("Neither table contains the item");
    }

    return product;
}
```

The following code will do the job, unfortunately at the cost of breaking lock encapsulation. The fact is that as long as we allow threads to access the hashtable abstractions directly, it's practically the best we can do.

```csharp
public static void MoveProductEvilSafe(int productKey,
    MultiWriteHashtable source, MultiWriteHashtable destination)
{
    lock (ApplicationFacade.MoveProductAtomicLock)
    {
        Product product = (Product)source[productKey];

        source.RemoveSafe(productKey);

        destination.AddSafe(productKey, product);
    }
}

public static Product GetProductEvilSafe(int productKey,
    MultiWriteHashtable table1, MultiWriteHashtable table2)
{
    Product product;

    lock (ApplicationFacade.MoveProductAtomicLock)
    {
        product = (Product)table1[productKey];

        if (product == null)
        {
            product = (Product)table2[productKey];
        }

        if (product == null)
        {
            throw new Exception("Neither table contains the item");
        }
    }

    return product;
}
```

As you can see, we broke lock encapsulation by locking on a public object (MoveProductAtomicLock), which is always a bad idea since it makes us vulnerable to separately authored modules that don't necessarily know anything about our implementation and might use the same lock in a way that, if we’re lucky, will starve our threads, and if we’re not, will cause a deadlock.

Another noticeable issue, which is a great example of the fact that locks do not support modular programming, is that every piece of code that accesses the hashtables must be aware of MoveProductAtomicLock and lock on it in order to avoid seeing the undesired intermediate state.

To better understand this, let’s consider an application that includes several ‘atomic locks’ similar to MoveProductAtomicLock. In order to avoid seeing intermediate states, different modules in the application will be required to know exactly which lock belongs to which data structure(s), and remember to use it whenever reading from the data structure(s). To obtain this knowledge, those modules must be aware of the internal implementation of the modules that write into the data structures.

To get a taste of just how hazardous locks can be, let’s consider another solution that will also work, at least for a while…

```csharp
public static void MoveProductBrutalSafe(int productKey,
    MultiWriteHashtable source, MultiWriteHashtable destination)
{
    lock (source.SyncRoot)
    {
        lock (destination.SyncRoot)
        {
            Product product = (Product)source[productKey];

            source.RemoveSafe(productKey);

            destination.AddSafe(productKey, product);
        }
    }
}

public static Product GetProductBrutalSafe(int productKey,
    MultiWriteHashtable table1, MultiWriteHashtable table2)
{
    Product product;

    lock (table1.SyncRoot)
    {
        product = (Product)table1[productKey];

        if (product == null)
        {
            product = (Product)table2[productKey];
        }

        if (product == null)
        {
            throw new Exception("Neither table contains the item");
        }
    }

    return product;
}
```

In order to avoid introducing a new public lock (MoveProductAtomicLock), we fell into the SyncRoot trap and broke nearly all the laws associated with lock-based synchronization. Again we broke lock encapsulation by locking on a public object (SyncRoot) and exposed ourselves to races and deadlocks; and again we created a situation where every piece of code that accesses the hashtables must know that it has to lock on one of the tables’ SyncRoot in order to avoid seeing the undesired intermediate state.

On top of all that, we made an even more heretical mistake by allowing our public methods to lock on their input parameters, which is really bad design! Public methods that are invoked by god knows who should never make assumptions that allow locking on objects they receive from the outside.

In addition, we acquired one SyncRoot object while holding another, without using lock hierarchies of some sort to prevent possible deadlocks. In fact, even if we did use lock hierarchies, we would still be exposed to the same hazard described above, i.e. conflicting with code that we don’t control and that won’t necessarily know anything about our lock hierarchy.

Threads Coordination (Waiting and Signaling)

In some cases one or more threads need to wait for some condition to become true in order to carry on with their work. In such cases, it’s common for the threads to wait on a kernel synchronization object called an "event" until another thread satisfies the condition and signals the event.

In the figure above, multiple threads try to pull an item from the products blocking queue. If the queue is empty, they wait on a ManualResetEvent until another thread puts an item in the queue and signals the event. When that happens, all the waiting threads try to retrieve an item from the queue; the first that succeeds continues with its work, and the others wait for the next signal. The fact that all the waiting threads are released when a ManualResetEvent is signaled may result in a lock convoy in cases where too many threads wait on the event.

An alternative approach is to use an AutoResetEvent, which releases only one thread when it’s signaled. A common issue associated with AutoResetEvent is that in some situations, due to an unanticipated race in the application, the event might be signaled twice before any thread manages to wait on it. In such a case, one signal will be lost, which might result in a deadlock if another waiting thread was counting on that particular signal.
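The lost signal is easy to reproduce in isolation. The sketch below (`LostSignalDemo` is a made-up name) signals an AutoResetEvent twice while nobody is waiting, then polls it twice without blocking. Since the event only remembers that it is signaled, not how many times, the second poll finds nothing.

```csharp
using System.Threading;

// Hypothetical demo type showing that an AutoResetEvent does not count signals.
public static class LostSignalDemo
{
    // Returns how many waits succeed after the event is signaled twice
    // with no thread waiting in between.
    public static int CountWakeupsAfterTwoSignals()
    {
        using (var autoEvent = new AutoResetEvent(false))
        {
            autoEvent.Set();   // first signal: the event becomes signaled
            autoEvent.Set();   // second signal: already signaled, nothing changes

            int wakeups = 0;
            // WaitOne(0) polls without blocking; a successful wait
            // automatically resets the event to non-signaled.
            if (autoEvent.WaitOne(0)) wakeups++;
            if (autoEvent.WaitOne(0)) wakeups++;
            return wakeups;
        }
    }
}
```

`CountWakeupsAfterTwoSignals()` returns 1, not 2. In a real application the second waiter would block indefinitely unless something else signals the event again.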

It’s not uncommon that we need to signal an event while holding a lock, which can be wasteful if the waking thread needs to acquire the lock that’s being held, because it will be awakened only to find out that it must wait again. This situation is called the two-step dance, and it can extend far beyond just two steps if many locks and events are involved.

Summary

Race conditions, deadlocks, lock convoys, lost notifications and the two-step dance, like many other problems associated with synchronization primitives, are considered so hazardous because they are hard to reason about and they surface only on true multiprocessor systems, where the threads really do execute simultaneously and thus expose new classes of errors.

STM.NET Overview

Wouldn’t concurrent programming be that much simpler if we could use one global lock to synchronize access to all the shared objects in the application? If that were the case we would never encounter a deadlock, and the chance of encountering a race would become that much smaller. It would also be nice if we didn’t have to choose a locking scheme: no matter whether the threads are reading or writing, whether the reading is frequent, or whether the writing is infrequent or of short duration, we would always use the same type of lock.

The obvious problem with using a single one-at-a-time lock (such as Monitor) to protect all the shared objects in the application is that it will drastically reduce the concurrency of the application, as the threads that execute concurrently will always have to wait for each other to complete, even if they are accessing a completely different set of resources.

What we need is some kind of a magic lock that we could use widely whenever reading/writing into a shared object, without sacrificing the concurrency of the application.

STM promises to provide us with such a magical lock, with which we can protect shared objects from concurrent access and still keep the application concurrent and scalable (though not without some overhead).

With STM, instead of using locks, we surround the regions of code that access shared data with an ‘atomic’ construct.

```csharp
atomic
{
    body...
}
```

In turn, STM will execute the ‘body’ (referred to from here on as the ‘atomic region’) atomically and in isolation.

STM manages to create the illusion of a single lock and still maintain great scalability by using an optimistic concurrency control technique. Instead of pessimistically acquiring a lock that lets only one thread enter the atomic region at a time, the runtime assumes that there is no contention and allows all the threads to execute atomic regions concurrently. Whenever a thread exits an atomic region, the runtime checks whether that assumption was incorrect (a transaction conflict), and if so, it rolls back the changes that were made in the region and re-executes it.

A transaction conflict occurs whenever one of the fields that was read inside one transaction has been changed by another transaction while the first transaction was running.
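The optimistic cycle of "read, compute, validate, redo on conflict" can be shown in miniature without any STM at all. The sketch below is only an analogy on a single integer, using a compare-and-swap loop; it is not how STM.NET works internally, and `OptimisticUpdateDemo`, `AddOptimistically` and `Run` are made-up names.

```csharp
using System.Threading;
using System.Threading.Tasks;

// Single-variable analogy of optimistic concurrency; not an STM.NET API.
public static class OptimisticUpdateDemo
{
    private static int s_stock;

    // Reads a snapshot, computes the new value without holding any lock,
    // and commits only if nobody changed the value in the meantime.
    // Returns the number of times the update had to be redone.
    public static int AddOptimistically(int delta)
    {
        int redoCount = 0;
        while (true)
        {
            int snapshot = Volatile.Read(ref s_stock);
            int updated = snapshot + delta;

            // CompareExchange stores 'updated' only if s_stock still equals
            // 'snapshot', and returns the value it found there.
            if (Interlocked.CompareExchange(ref s_stock, updated, snapshot) == snapshot)
                return redoCount;

            redoCount++; // conflict: another thread committed first, so redo
        }
    }

    // Runs several tasks that increment concurrently; returns the final value.
    public static int Run(int taskCount, int incrementsPerTask)
    {
        s_stock = 0;
        var tasks = new Task[taskCount];
        for (int t = 0; t < taskCount; t++)
        {
            tasks[t] = Task.Run(() =>
            {
                for (int i = 0; i < incrementsPerTask; i++) AddOptimistically(1);
            });
        }
        Task.WaitAll(tasks);
        return s_stock;
    }
}
```

`OptimisticUpdateDemo.Run(4, 10000)` returns 40000: no update is lost even though no lock is ever held, at the price of occasionally redoing work when two threads collide.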

The cool thing about STM.NET is that instead of worrying about which lock and what kind of lock needs to be held while accessing a shared field, we only have to make sure that we access the field within an atomic region.

As explained in the previous section, even when using a single lock we are still exposed to race conditions (which could happen if we forget to take the lock), so by the same token, if we access a shared field from outside of an atomic region we might encounter a race.

The Down Side

Unfortunately, not everything about STM is nice and rosy. The optimistic nature of STM, including the re-execution scheme, allows the execution of ‘zombies’, which are transactions that have observed an inconsistent read-set but have yet to abort.

Since ‘zombies’ see an invalid snapshot of shared memory, they can exhibit unexpected behavior such as infinite loops, illegal memory accesses, and other run-time misbehavior.

In addition, there are still some ‘zombie’-related questions that remain unanswered. For example, what about writing to the log from a transactional context? Do we really want to see traces from zombie transactions? And what about debugging? When breaking in transactional code, do we really want the debugger to step through zombie transactions?

STM.NET Characteristics

Isolation

With STM, in order to read from and write into a collection of products in a thread-safe fashion, we’ll write the following code:

```csharp
List<Product> m_products = new List<Product>();

public void AddProduct(Product product)
{
    atomic
    {
        m_products.Add(product);
    }
}

public Product GetProduct(int id)
{
    atomic
    {
        foreach (Product product in m_products)
        {
            if (product.Id == id)
                return product;
        }
    }

    return null;
}
```

Which will achieve the same functionality as:

```csharp
List<Product> m_products = new List<Product>();

public void AddProduct(Product product)
{
    lock (ApplicationFacade.GlobalLock)
    {
        m_products.Add(product);
    }
}

public Product GetProduct(int id)
{
    lock (ApplicationFacade.GlobalLock)
    {
        foreach (Product product in m_products)
        {
            if (product.Id == id)
                return product;
        }
    }

    return null;
}
```

Since STM guarantees that every piece of code that runs in a transactional context sees a consistent snapshot of memory, developers can reason about transactional code as if it were sequential.

Composition

In the ‘Locking Hazards’ section we realized that lock-based programs do not compose. We spent a good deal of time reviewing the example with the two thread-safe hashtables, which worked great until we needed to delete an item from one table and insert it into the other without exposing the intermediate state; we found that we simply can’t implement that requirement without inviting lock-induced deadlocks.

Since STM guarantees that modifications made in a transactional context will not be visible to other transactions until the transaction commits, the composition problem becomes a non-issue!

As the following code indicates, STM’s solution for composing the delete and the insert operations is dead simple.

```csharp
private static void MoveProducts(int productKey,
    MultiWriteHashtable source, MultiWriteHashtable destination)
{
    atomic
    {
        Product product = (Product)source[productKey];

        source.RemoveSafe(productKey);

        destination.AddSafe(productKey, product);
    }
}

private static Product GetProduct(int productKey,
    MultiWriteHashtable table1, MultiWriteHashtable table2)
{
    atomic
    {
        Product product;
        product = (Product)table1[productKey];

        if (product == null)
        {
            product = (Product)table2[productKey];
        }

        return product;
    }
}
```

Need I remind you about races? Deadlocks? Lock hierarchies? Lock encapsulation? SyncRoot? All have become vague memories of a dark past.

Modularity

In the ‘Locking Hazards’ section we also realized that lock-based programs do not support modular programming. We saw that in some cases there’s no escape from sharing locks or using lock hierarchies of some sort, which as a result forces different modules to be aware of each other’s internal implementation.

With STM, since there are no visible locks, the questions of which locks to take, and in which order, simply do not arise. This enables us to write programs in a fundamentally more modular way than we can with locks.

Failure Atomicity

STM provides a robust mechanism for handling failures: when an exception escapes the boundaries of an atomic block, STM catches it, rolls back the changes made in the block prior to the exception, and re-executes the transaction.

This approach is not set in stone; there are alternatives, such as aborting the transaction by default and allowing programmers to catch the exception and re-execute if that’s the desired behavior. Since STM has not been used widely by real-world developers, there’s no real knowledge of what the common case is or what the majority of developers will expect to happen in case of an exception.

Threads Coordination

STM introduces the ‘retry’ statement, which can be called from anywhere inside the transaction region; once called, the transaction rolls back and re-executes.

When a transaction needs to wait for a certain condition to become true, it simply checks the condition and retries if it is false. Once another transaction satisfies the condition, the waiting transaction stops retrying and continues with its work.

In order to avoid unnecessary spinning, STM tracks the fields that were read before the retry, and re-executes only once one of them has changed. This still doesn’t mean that the condition that caused the retry will be satisfied when the transaction is finally woken up, but it does greatly reduce the amount of unnecessary spinning.

Let’s see how the ‘retry’ statement can assist in the implementation of a function that blocks until a product with a given ID is added to the products collection:

```csharp
public List<Product> m_products = new List<Product>();

public void AddProduct(Product product)
{
    atomic
    {
        m_products.Add(product);
    }
}

public Product PollProduct(int id)
{
    atomic
    {
        foreach (Product product in m_products)
        {
            if (product.Id == id)
                return product;
        }

        // In case no product with matching ID has been found, wait for change
        // in the m_products collection and re-execute
        retry;
    }
}
```

As you can see, with ‘retry’ the implementation of the blocking function is straightforward. All we have to do is look for a product with the requested ID, and if no such product is found, call retry, which in turn waits for another transaction to commit a change to the products collection and then re-executes.
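For comparison, the closest lock-based analog of "check the condition, and if it doesn't hold, sleep until something changes" is a Monitor.Wait loop. The sketch below is that analog in plain C#, not STM.NET code; `BlockingProductList` and its members are made-up names, and product IDs are stored as plain integers to keep the example self-contained.

```csharp
using System.Collections.Generic;
using System.Threading;

// Lock-based analog of the 'retry' pattern; hypothetical type, not STM.NET.
public sealed class BlockingProductList
{
    private readonly List<int> _productIds = new List<int>();
    private readonly object _gate = new object();

    public void AddProduct(int id)
    {
        lock (_gate)
        {
            _productIds.Add(id);
            // Wake every waiter so each can re-check its own condition.
            Monitor.PulseAll(_gate);
        }
    }

    // Blocks until a product with the given ID exists. The timeout applies
    // to each individual wait, not to the call as a whole.
    public bool WaitForProduct(int id, int timeoutPerWaitMs)
    {
        lock (_gate)
        {
            while (!_productIds.Contains(id))
            {
                // Wait releases the lock while sleeping and reacquires it
                // before returning; false means the wait timed out.
                if (!Monitor.Wait(_gate, timeoutPerWaitMs))
                    return _productIds.Contains(id);
            }
            return true;
        }
    }
}
```

Note what the lock-based version has to get right by hand: the writer must remember to pulse, the reader must re-check the condition in a loop, and both must agree on the same lock object. With ‘retry’, the set of fields that were read plays the role of the condition variable automatically.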

I/O Support

A common issue with transactional memory is that not all operations are inherently transactional, mainly because they cannot be undone, or undoing them is unreasonably costly. Examples of such operations are I/O operations like writing to the Console, sending data over the network, writing to a file and the like.

Some implementations of STM (like Haskell’s) simply don’t allow I/O in a transaction; others buffer the I/O operations and execute them one by one only after a successful commit. STM.NET supports I/O operations mainly by enabling their postponement via Atomic.DoAfterCommit, which accepts a delegate that is executed only after a successful commit.

In Concurrent Haskell, STM actions (on TVars) and pure computations (which don’t access shared memory) are separated from I/O actions by the type system, so preventing programmers from calling I/O actions from a transactional context, while allowing only STM actions and pure computations, is straightforward.
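The idea of postponing side effects until commit can be sketched in a few lines. The class below is a toy stand-in written for this post, not the real Atomic.DoAfterCommit (whose exact signature isn't shown here): it queues delegates during the transaction, drops them on rollback, and runs them in order only on commit.

```csharp
using System;
using System.Collections.Generic;

// Toy illustration of deferring side effects until commit.
// Hypothetical type; it does not reproduce the STM.NET API.
public sealed class DeferredActionsTransaction
{
    private readonly List<Action> _afterCommit = new List<Action>();

    // Queue a side effect (for example a Console write) instead of running it now.
    public void DoAfterCommit(Action action)
    {
        _afterCommit.Add(action);
    }

    // On rollback the queued side effects are discarded, so a re-executed
    // transaction never performs the same I/O twice.
    public void Rollback()
    {
        _afterCommit.Clear();
    }

    // On commit the queued side effects run once, in the order they were queued.
    public void Commit()
    {
        foreach (Action action in _afterCommit)
            action();
        _afterCommit.Clear();
    }
}
```

If a transaction queues a log write, rolls back, re-executes and then commits, only the log write from the successful attempt is performed.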

STM.NET Implementation

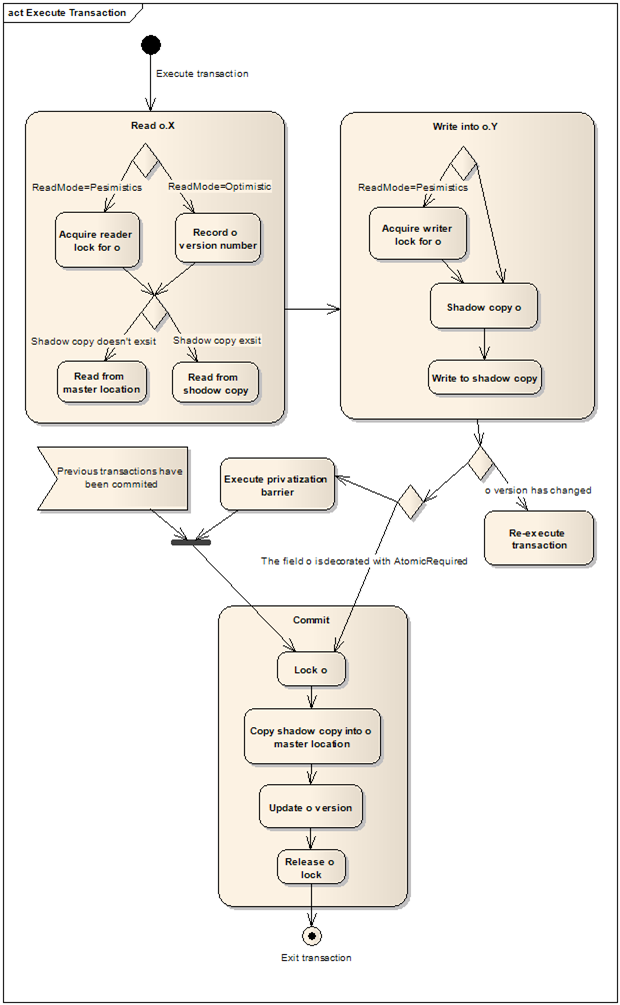

In order to guarantee that the objects inside the transaction are read and written atomically and in isolation, when a piece of code executes in a transactional context, the JIT generates a new version of the code that plugs in calls to STM internal APIs wherever appropriate. The new code logs the version of the objects that are read (optimistic reads), and instead of writing into the objects’ master location, it generates shadow copies of the objects and writes the data into the shadow copies (buffered writes). Once the end of the transaction has been reached, the objects that have been read are probed for their current version number. If the version of one of the objects has changed since the first time it was read, the shadow copies are thrown away and the transaction re-executes; otherwise, the shadow copies are copied into their master location and the version number of the objects that have been modified is incremented, all under the protection of fine-grained locks (commit-time locking).
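The version-log, shadow-copy and validate-at-commit cycle can be imitated by hand in ordinary C#. What follows is a deliberately tiny sketch under loud assumptions: `VersionedCell`, `TinyTransaction` and `TinyStm` are invented for this post, a single global lock stands in for the fine-grained commit-time locks, and the calls that the JIT would plug in automatically are written out explicitly as `Read` and `Write`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical shared object: a value plus a version bumped on every commit.
public sealed class VersionedCell
{
    public int Value;
    public long Version;
}

// Illustrative only; these are not STM.NET types or APIs.
public sealed class TinyTransaction
{
    // One global lock stands in for the fine-grained commit-time locks.
    private static readonly object s_commitLock = new object();

    private readonly Dictionary<VersionedCell, long> _readLog =
        new Dictionary<VersionedCell, long>();
    private readonly Dictionary<VersionedCell, int> _shadow =
        new Dictionary<VersionedCell, int>();

    public int Read(VersionedCell cell)
    {
        int buffered;
        if (_shadow.TryGetValue(cell, out buffered))
            return buffered;                  // see our own buffered write

        lock (s_commitLock)
        {
            // Optimistic read: log the version seen on the first read.
            if (!_readLog.ContainsKey(cell))
                _readLog[cell] = cell.Version;
            return cell.Value;
        }
    }

    public void Write(VersionedCell cell, int value)
    {
        _shadow[cell] = value;                // buffered write, master untouched
    }

    public bool TryCommit()
    {
        lock (s_commitLock)
        {
            // Validate: every cell we read must still be at the logged version.
            foreach (KeyValuePair<VersionedCell, long> read in _readLog)
                if (read.Key.Version != read.Value)
                    return false;             // conflict: shadow copies are dropped

            // Publish: copy shadow values to the master and bump versions.
            foreach (KeyValuePair<VersionedCell, int> write in _shadow)
            {
                write.Key.Value = write.Value;
                write.Key.Version++;
            }
            return true;
        }
    }
}

public static class TinyStm
{
    // Re-executes the body with a fresh transaction until it commits.
    public static void Atomic(Action<TinyTransaction> body)
    {
        while (true)
        {
            var tx = new TinyTransaction();
            body(tx);
            if (tx.TryCommit()) return;
        }
    }
}
```

A transfer such as `TinyStm.Atomic(tx => { tx.Write(a, tx.Read(a) - 3); tx.Write(b, tx.Read(b) + 3); })` either publishes both writes or neither. Like the real thing, this sketch validates only at commit, so a transaction can run for a while on a stale snapshot before it is thrown away.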

Contention Management

In an attempt to reduce the conflict rate between a set of transactions, STM employs a contention manager (CM) that detects conflicts and decides, according to a pre-defined policy, how long to wait before re-executing a conflicting transaction, and which read-mode technique to use. By default, when a transaction starts, the CM attempts to use the optimistic read technique described above. Once it detects a conflict, it considers switching to a more pessimistic approach (using a reader/writer locking scheme where conflicting transactions are delayed until a lock that they require is available), depending on the transaction size (defined by the read count) and the re-execution count.

A naive optimistic read technique (one that doesn’t include switching) with an automatic re-execution scheme can lead to starvation in cases where a large transaction conflicts with smaller transactions that execute periodically. In such a case, the small transactions will always manage to commit changes that conflict with the large transaction, causing it to roll back and re-execute again and again.

Object Level Granularity

The CM validates reads at the object level and not at the field level, meaning that if o.X was read by one transaction and o.Y was written by another transaction, then as far as STM is concerned, the two transactions conflict.

Similarly, if all the reads are successfully validated, STM copies the entire shadow copy into the master location, not only the fields that were modified. This can lead to a buried update when one of the fields of a certain object is updated from a transactional context and, at the same time, another field of the same object is updated from a non-transactional context. When the transaction commits, the entire shadow copy is copied into the master location, overwriting the changes made in the non-transactional context.
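The buried update can be replayed step by step in a single thread. The sketch below only simulates the sequence of events described above by hand; `Item` and `BuriedUpdateDemo` are made-up names, and the "transaction" is nothing more than a manual whole-object shadow copy.

```csharp
// Hypothetical object with two independent fields.
public sealed class Item
{
    public int Price;
    public int Stock;
}

// Single-threaded replay of the buried update; not STM.NET code.
public static class BuriedUpdateDemo
{
    public static Item Run()
    {
        var master = new Item { Price = 10, Stock = 5 };

        // The transaction starts and takes a shadow copy of the WHOLE object.
        var shadow = new Item { Price = master.Price, Stock = master.Stock };

        // Transactional write: buffered in the shadow copy.
        shadow.Price = 12;

        // Meanwhile, non-transactional code writes straight to the master.
        master.Stock = 4;

        // Commit: the entire shadow copy is copied back, field by field,
        // including the Stock value that the transaction never touched.
        master.Price = shadow.Price;
        master.Stock = shadow.Stock;   // the stale 5 overwrites the 4

        return master;
    }
}
```

After `Run()`, Price is 12 as expected, but Stock is back to 5: the non-transactional update to 4 has been silently buried.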

Commit Ordering (Supporting the Privatization Pattern)

The act of privatizing is done by associating a shared object with a flag that states whether or not the object is ‘online’. Threads that wish to write into the object can only do so if the flag is set to online (if it isn’t, they can wait for the flag to become online); threads that wish to read from the object can do so freely from a non-transactional context, without worrying that it will be changed midway, by first setting the online flag to false. Supporting the privatization pattern is widely considered to be important for providing an intuitive programming model, and it’s especially encouraged when working with STM.

With STM, the implementation of the privatization pattern is straightforward. The readers change the flag from inside a transaction and then read from the object safely from outside it; the writers check the flag and write into the object inside a transaction. If a writer needs to wait for the flag to become online, it can use the ‘retry’ statement mentioned above.

Let’s see how the privatization pattern can be used to privatize the products collection, allowing multiple threads to traverse it from a non-transactional context in a thread-safe fashion.

```csharp
public List<Product> m_products = new List<Product>();

// The list starts out shared (published), so writers can add to it
// until a reader privatizes it.
private bool m_productsShared = true;

public void AddProduct(Product product)
{
    atomic
    {
        if (!m_productsShared) retry;

        m_products.Add(product);
    }
}

public Product GetProduct(int id)
{
    atomic
    {
        m_productsShared = false;
    }

    foreach (Product product in m_products)
    {
        if (product.Id == id)
            return product;
    }

    return null;
}
```

In order to allow multiple readers to traverse the products list from a non-transactional context, the list is associated with the m_productsShared flag, which allows privatizing and publicizing the list. Threads can add an item to the products list only if it is published (i.e. m_productsShared is true); if it isn’t, they retry until it is published. Threads that need to traverse the products list can do so from a non-transactional context by first privatizing the list (i.e. setting m_productsShared to false).

Since STM implements weak atomicity (or weak isolation), in which non-transactional memory accesses go directly to memory and bypass the STM access protocols, the privatization pattern can only be supported by enforcing an ordering on transaction commits.

Consider a case where one thread executes ‘AddProduct’, sees that the products list is online (m_productsShared=true), adds a product to the list and starts committing; then a 2nd thread starts executing ‘GetProduct’, takes the products list offline, and starts committing. If STM does not guarantee that the 1st thread completes its commit before the 2nd, we might encounter a situation where the 2nd thread finishes its commit and starts iterating over the collection outside of the transaction, and only then the 1st thread writes its changes to the list and finishes its commit. In that case the privatization pattern is broken, and the 2nd thread will experience a ‘Collection was modified’ exception.

STM provides this ordering using a commit ticket protocol that serializes the commit processing of non-read-only transactions. This protocol essentially ensures that transactions are retired from commit processing in the same order they entered.
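The idea of retiring commits in arrival order can be sketched with a simple ticket dispenser. This is only an illustration under assumed names (`CommitTicket`, `Take`, `Retire` and the demo class are hypothetical); the real STM.NET protocol is not shown in this post and is certainly more elaborate.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical ticket dispenser: commits retire in the order tickets were taken.
public sealed class CommitTicket
{
    private readonly object _gate = new object();
    private long _nextTicket;   // next ticket to hand out
    private long _nowServing;   // ticket that is allowed to retire next

    // Called when a transaction enters commit processing.
    public long Take()
    {
        lock (_gate) { return _nextTicket++; }
    }

    // Blocks until every earlier ticket has retired, runs the final
    // commit step, and then admits the next ticket.
    public void Retire(long ticket, Action publish)
    {
        lock (_gate)
        {
            while (_nowServing != ticket) Monitor.Wait(_gate);
            try { publish(); }
            finally
            {
                _nowServing++;
                Monitor.PulseAll(_gate);
            }
        }
    }
}

public static class CommitTicketDemo
{
    // Takes three tickets, starts the committers in reverse order, and
    // returns the order in which they actually retired.
    public static List<long> Run()
    {
        var tickets = new CommitTicket();
        var retired = new List<long>();
        long t0 = tickets.Take(), t1 = tickets.Take(), t2 = tickets.Take();

        var threads = new List<Thread>();
        foreach (long t in new[] { t2, t1, t0 })
        {
            long ticket = t;
            var thread = new Thread(
                () => tickets.Retire(ticket, () => retired.Add(ticket)));
            thread.Start();
            threads.Add(thread);
        }
        foreach (var thread in threads) thread.Join();
        return retired;
    }
}
```

Even though the thread holding the last ticket starts first, it waits inside `Retire` until the earlier tickets are done, so the writer that entered commit processing first always finishes before the privatizing transaction that entered after it.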

As you can imagine, serializing transaction commits can have a drastic effect on the ability of STM to scale, and scalability is the main motivation for using it. To sweeten the bitter pill, STM allows concurrent commits for read-only transactions and for transactions that only access fields that are accessible only from a transactional context (i.e. fields that are decorated with the AtomicRequired attribute), an optimization referred to as the “TVar optimization”.

Concurrent Haskell need not worry about the privatization problem, since it implements strong atomicity by allowing only STM actions to execute in a transactional context and disallowing STM actions from executing in a non-transactional context. Since Haskell’s STM actions (TVar) are separated from other actions by the type system, the implementation of strong atomicity in Haskell is straightforward (this is yet another reason why the purely functional core and monadic type system of Haskell fit the STM programming model so well).

In order to enable the privatization/publication pattern in strong atomicity surroundings, Haskell’s STM introduces the notion of ‘Dynamic separation’, which enables programmers to make explicit calls to STM in order to change the protection state of references from protected (shared between multiple threads and accessed only in transactions) to unprotected (inaccessible from transactions) and vice versa.

SkySTM implements weak atomicity, but presents an advanced privatization mechanism in which a privatizing transaction waits only for conflicting transactions, in contrast to STM.NET mechanisms, which require transactions to wait even for non-conflicting transactions. Thus, with SkySTM, if the application has few conflicts, the privatization mechanism does not impede its scalability.

Walk Through

To get a taste of how STM operates internally, let’s see how it executes the following transaction.

    atomic
    {
        o.Y = o.X;
    }

Even for a simple read/write operation like this one, STM introduces quite a bit of code behind the scenes. Let’s take the optimistic road:

[Reading o.X] STM records the version of o (the version is stored in a structure that STM associates with every object that participates in a transaction) and reads the value of field X from the master location (rather than from a shadow copy, since o hasn’t been written into yet in the current transaction).

[Writing into o.Y] STM copies o into a shadow copy and writes the value that was read from X into field Y of the shadow copy.

[Validation] STM checks whether another committed transaction changed o by comparing the current version of o with the version that was recorded prior to the read. If o has been changed, the transaction re-executes; otherwise it continues to the next stage.

[Pre-Commit] Before starting the commit, in order to overcome the privatization problem described above, STM checks whether all the fields that participated in the transaction (in this case, the fields of o) are marked as ‘Atomic Required’. If so, it jumps directly to the commit stage; otherwise it waits until all previous non-read-only transactions that are currently committing are done.

[Commit] Finally, STM copies the entire shadow copy into o’s master location and updates its version under the protection of a thin lock.
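The read, write, validation and commit stages can be imitated by hand. The sketch below is an assumption-laden toy, not the code STM.NET generates: `TxObject`, `WalkThrough` and the `interfere` callback (which stands in for a competing transaction that commits in the middle) are hypothetical, and the pre-commit ordering stage is left out entirely.

```csharp
using System;

// Hypothetical transactional object: master fields plus a version stamp.
public sealed class TxObject
{
    public int X, Y;
    public long Version;
    public readonly object CommitLock = new object();
}

public static class WalkThrough
{
    // Executes "atomic { o.Y = o.X; }" by hand and returns the number of
    // attempts it took. 'interfere' runs between the transaction body and
    // validation to model another transaction committing in between.
    public static int CopyXToY(TxObject o, Action<int> interfere)
    {
        for (int attempt = 1; ; attempt++)
        {
            // [Reading o.X] record the version, then read the master copy.
            long seenVersion = o.Version;
            int x = o.X;

            // [Writing into o.Y] clone o into a shadow copy and write there.
            var shadow = new TxObject { X = o.X, Y = o.Y };
            shadow.Y = x;

            interfere(attempt);

            lock (o.CommitLock)
            {
                // [Validation] re-execute if o changed since it was read.
                if (o.Version != seenVersion) continue;

                // [Commit] copy the whole shadow back and bump the version.
                o.X = shadow.X;
                o.Y = shadow.Y;
                o.Version++;
                return attempt;
            }
        }
    }
}
```

If the callback changes o.X and bumps the version during the first attempt, validation fails and the body runs again against the new value, which is the re-execution described in the validation stage.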

Alternative Implementations

Some implementations of STM use ‘in place writes’ technique instead of ‘buffered writes’. With ‘in place writes’, writes are made directly on the master location under the protection of pessimistic locks (encounter-time locking) and recorded into an ‘undo’ log. In case of a conflict, all the writes are rolled back using the ‘undo’ log. Sadly, the ‘in place writes’ scheme doesn’t support the privatization pattern.
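To make the ‘undo’ log concrete, here is a minimal sketch of in-place writes over a plain int array. The `InPlaceTx` class is a hypothetical name, and the encounter-time locks that a real implementation would take before each write are deliberately omitted.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-place-write transaction over an int array.
public sealed class InPlaceTx
{
    private readonly int[] _memory;

    // Undo log: (index, old value) for every write, newest on top.
    private readonly Stack<(int Index, int OldValue)> _undo =
        new Stack<(int Index, int OldValue)>();

    public InPlaceTx(int[] memory) { _memory = memory; }

    // Writes go straight to the master location; the old value is logged.
    public void Write(int index, int value)
    {
        _undo.Push((index, _memory[index]));
        _memory[index] = value;
    }

    // On success the writes are already in place; just drop the log.
    public void Commit() { _undo.Clear(); }

    // On conflict, replay the log newest-first to restore the old values.
    public void Rollback()
    {
        while (_undo.Count > 0)
        {
            var (index, oldValue) = _undo.Pop();
            _memory[index] = oldValue;
        }
    }
}
```

Replaying the log newest-first matters when the same location was written more than once: the oldest logged value is restored last, so the location ends up with its pre-transaction value.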

TL2 (Transactional Locking 2) also uses ‘buffered writes’, but instead of maintaining shadow copies that are copied on commit, it logs the writes in a write-set composed of address/value pairs, and copies each value to its address on a successful commit.
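A write-set of address/value pairs can be sketched as follows. This toy uses array indexes as ‘addresses’ and a hypothetical `WriteSetTx` class; it shows only the buffering and copy-on-commit part and leaves out the global version clock, the per-location locks and the read-set validation that TL2 relies on.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical buffered-write transaction: array indexes play the role of addresses.
public sealed class WriteSetTx
{
    private readonly int[] _memory;

    // Write-set: address -> pending value (the last write to an address wins).
    private readonly Dictionary<int, int> _writeSet = new Dictionary<int, int>();

    public WriteSetTx(int[] memory) { _memory = memory; }

    // Writes are only logged; the master location is untouched until commit.
    public void Write(int address, int value) { _writeSet[address] = value; }

    // Reads must see the transaction's own pending writes first.
    public int Read(int address)
    {
        return _writeSet.TryGetValue(address, out int pending)
            ? pending
            : _memory[address];
    }

    // On a successful commit, each value is copied to its address.
    public void Commit()
    {
        foreach (var entry in _writeSet) _memory[entry.Key] = entry.Value;
        _writeSet.Clear();
    }
}
```

Compared with the shadow-copy scheme, only the locations that were actually written are copied on commit, so an untouched neighbouring field is never overwritten.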

Hardware Transactional Memory

Although STM is starting to sound more and more appealing, hardware transactions (HTM) implemented entirely in processor hardware can be expected to outperform any software-based solution.

With HTM, writes are held in hardware registers and cache. On a successful commit they are propagated into main system memory; on a transaction conflict, the cache lines holding the writes are discarded. The great benefit of using cache lines is that it lets HTM use the existing cache coherency protocols to detect conflicts between transactions!

The downside of HTM transactions is that they are limited to small transactions due to the size limitation of the cache, as opposed to STM transactions, which can handle larger and longer transactions. That may be the main reason why HTM and STM transactions must be able to operate together…

As mentioned at the top, Sun made an attempt to support a form of “best effort” hardware transactional memory in its multicore processor, code-named “Rock”. The Rock processor has 16 cores, each capable of running two threads simultaneously, yielding 32 threads per chip! Unfortunately, the Rock project has been canceled.

To provide hardware transaction functionality, two new instructions (chkpt, commit) and one new status register (cps) were introduced. The chkpt instruction begins a transaction, and the commit instruction commits it.


> produced example programs which were not written by the language implementers

It's an even stronger result in the Haskell world. Haskell's STM is used in production systems, not just "example programs". For example, at Galois.

(Written up here, http://www.galois.com/blog/2009/04/27/engineering-large-projects-in-haskell-a-decade-of-fp-at-galois/)

Thanks for the note, I will include this piece of detail in the post.

A great post!

Thank you Aviad for revealing such interesting technologies. I wasn't aware of the transactional memory concept up until now. It sounds like a promising technology.

I guess that when 16/32/64/128 CPU machines become mainstream, the software industry will strive for enabling technologies like STM.

Best regards and keep on providing us with such great reads!

Wow, great post Aviad!!

I have a question though,

When you described the privatization problem you mentioned that Haskell supports strong atomicity by not allowing TVar actions to be called from non-transactional regions,

Maybe I am missing something, but I don't understand how the privatization pattern can be implemented if you can't access TVar actions from non-transactional code?

Great question!

In order to enable the privatization/publication pattern in strong atomicity surroundings, STM introduces the notion of ‘Dynamic separation’, which enables programmers to make explicit calls to change the protection state of references from protected (shared between multiple threads and accessed only in transactions) to unprotected (inaccessible from transactions).

I didn't mention it since this post is more about STM.NET, and Haskell's STM deserves its own post, but since you asked I will include it in the post.

Best Regards,