In the previous post we saw how applications that need to scale up can use the Task Parallel Library and the improved CLR 4.0 thread pool to optimize the scheduling of large numbers of fine-grained, short-lived tasks.

In this post we’ll dive into the implementation of the CLR thread pool to understand how it optimizes the creation and destruction of threads in order to execute asynchronous callbacks in a scalable fashion.

CLR Thread Pool vs. Win32 Thread Pool

The CLR thread pool is the managed equivalent of the Win32 thread pool (available since Windows 2000). Although the two expose different APIs and differ in design, implementation and internal structure, their basic mode of operation is the same: application code queues callbacks, and the thread pool executes them asynchronously, using heuristic algorithms to do so in a scalable fashion.

The main advantages of the CLR thread pool are that 1) it keeps a separate pool for ordinary callbacks (user callbacks, timers, etc.) and a separate pool for asynchronous I/O (backed by a process-wide I/O completion port), and 2) it is aware of managed beasts such as the GC, which blocks application threads during a collection and could otherwise cause an unaware pool to create redundant threads.

Managed applications should use the CLR thread pool even on Windows Vista and later, whose new thread pool exposes a richer API and performs much better than the legacy Win32 pool. Another reason is that the CLR thread pool is already used by many components that are integral parts of the .NET Framework, such as Sockets, FileWriter/Reader, the System.Timers and System.Threading timers, and more.

How Does the CLR Thread Pool Work, and Why Should We Care?

There’s a lot of truth in the saying that most applications are better off being designed without regard to the internal workings of the thread pool (since they are subject to change). However, it’s important for developers to become familiar with the thread pool’s internals, since 1) nobody likes black boxes managing the execution of their code, 2) it might convince some to drop the idea of writing a custom thread pool, and 3) misusing the thread pool can result in starvation (due to too many callbacks running together) or, in extreme cases, deadlocks (due to callbacks relying on each other to complete).

So...

Since the main overhead of asynchronous execution is the creation and destruction of threads, a key job of the thread pool is to optimize how threads are created and destroyed.

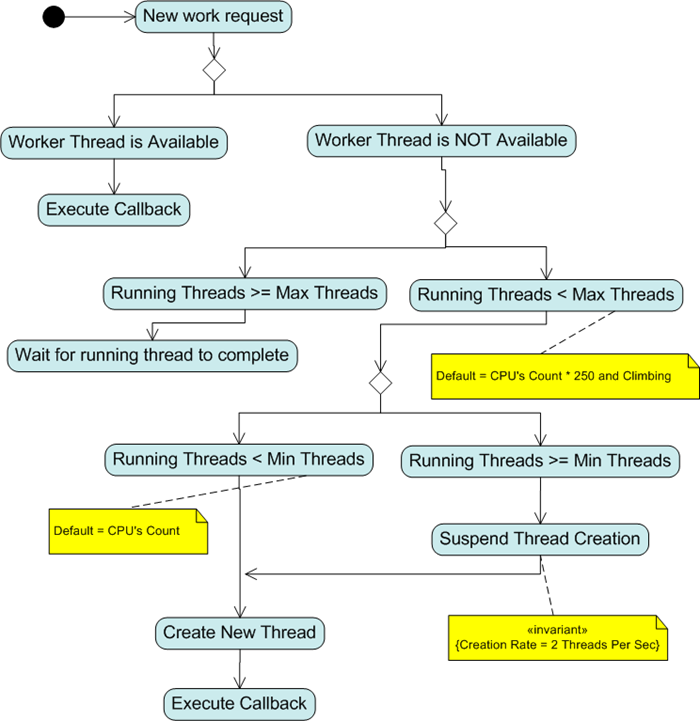

The basic operation of the thread pool is actually pretty easy to explain. The thread pool starts with 0 threads and immediately creates new threads to serve work requests until the number of running threads reaches a configurable minimum (by default, the number of CPUs on the machine).

Once the number of running threads is equal to or greater than that minimum, the thread pool creates new threads at a rate of 1 thread per 0.5 second. This means that if your application runs on a dual-core machine and 16 work requests that each take 10 seconds are scheduled together on an empty pool, the first two requests are served immediately, the third after 0.5 second, the fourth after 1 second and the 16th after 7 seconds ((16 - 2) × 0.5 s). In addition, to avoid unnecessary starvation, a daemon thread runs in the background and periodically monitors the CPU; when CPU utilization is low, it creates new threads as appropriate.
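The arithmetic in this example can be reproduced with a small Python model. It is a simplified sketch, assuming only the two rules described here (immediate creation up to the minimum, then one new thread every `inject_delay` seconds) and ignoring the CPU-monitoring daemon and the maximum. It also assumes no request finishes before the last one starts, which holds here because every request runs for 10 seconds. `injection_start_times` is a hypothetical helper, not a .NET API.

```python
def injection_start_times(num_requests, num_cpus, inject_delay=0.5):
    """Start time (seconds) of each of `num_requests` requests queued together
    on an empty pool, under the simplified pre-4.0 injection rule."""
    start_times = []
    for i in range(num_requests):
        # Requests 0..num_cpus-1 get one of the minimum threads immediately;
        # every later request waits for one more injection step.
        injections_needed = max(0, i - num_cpus + 1)
        start_times.append(injections_needed * inject_delay)
    return start_times


times = injection_start_times(16, num_cpus=2)
print(times[0], times[1], times[2], times[3], times[15])  # 0.0 0.0 0.5 1.0 7.0
```

Changing `num_cpus` shows how a larger minimum shifts the whole schedule earlier: on a 4-core machine the 16th request would start after (16 - 4) × 0.5 = 6 seconds.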

The thread pool will not create new threads once it reaches a configurable maximum. The default maximum is 250 × the number of CPUs, which is 10 times more than in version 1.0 of the .NET Framework. The default maximum was increased to reduce the chance of deadlocks that can occur when callbacks rely on each other to complete.
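Why a larger maximum helps can be illustrated with Python’s `concurrent.futures.ThreadPoolExecutor` standing in for the CLR pool (a swapped-in pool, not the CLR one). A parent callback queues a child callback on the same pool and blocks until the child finishes. With a single worker the child can never start; with two workers it completes. The `timeout` exists only so the demo returns instead of hanging forever, and `parent_task` and `run` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


def parent_task(pool, wait_seconds):
    # The parent queues a child on the same pool and waits for it:
    # the "callbacks relying on each other" pattern.
    child = pool.submit(lambda: "child done")
    try:
        return child.result(timeout=wait_seconds)
    except FutureTimeout:
        # No free worker, so the child never started; without the timeout
        # this wait would be a deadlock.
        return "starved"


def run(max_workers, wait_seconds=0.5):
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return pool.submit(parent_task, pool, wait_seconds).result()


print(run(max_workers=1))  # starved
print(run(max_workers=2))  # child done
```

Raising the cap only lowers the odds of this pattern blocking; any fixed maximum can still be exhausted by a deep enough chain of dependent callbacks.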

After a thread finishes its work, it is not destroyed immediately; instead, it stays in the pool waiting for more work. When new work arrives, it is served immediately by one of the waiting threads. Waiting threads are destroyed only after spending 10 seconds (previously 40 seconds) idle in the pool.
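The retirement rule can be sketched as a toy pool in which each worker waits for work with a timeout and exits when that timeout expires with nothing to do. This is an illustrative Python model, not the CLR implementation: `RetiringPool` and its methods are hypothetical, it starts a thread only when none is alive (no injection schedule), and the idle timeout is shortened from 10 seconds to 0.2 second so the demo runs quickly.

```python
import queue
import threading
import time


class RetiringPool:
    """Hypothetical toy pool: idle workers retire after `idle_timeout` seconds."""

    def __init__(self, idle_timeout):
        self.idle_timeout = idle_timeout
        self.work = queue.Queue()
        self.lock = threading.Lock()
        self.live_workers = 0

    def _start_worker_locked(self):
        # Caller must hold self.lock.
        self.live_workers += 1
        threading.Thread(target=self._worker, daemon=True).start()

    def _worker(self):
        while True:
            try:
                # Finished threads wait here, ready to serve new work at once.
                callback = self.work.get(timeout=self.idle_timeout)
            except queue.Empty:
                with self.lock:
                    if self.work.empty():
                        self.live_workers -= 1
                        return  # idle for idle_timeout seconds: retire
                continue  # work arrived while timing out: keep serving
            callback()

    def queue_callback(self, callback):
        with self.lock:
            self.work.put(callback)
            if self.live_workers == 0:
                self._start_worker_locked()


pool = RetiringPool(idle_timeout=0.2)
done = threading.Event()
pool.queue_callback(done.set)
done.wait(1)
print(pool.live_workers)  # 1: the thread waits in the pool after finishing
time.sleep(0.6)
print(pool.live_workers)  # 0: it retired after 0.2 s without work
```

The retirement check and `queue_callback` both run under the same lock, so a callback queued just as the last worker times out is never stranded: either the worker sees it and keeps going, or `queue_callback` sees zero live workers and starts a new one.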

The figure above shows how the thread pool’s ‘thread injection and retirement algorithm’ works.

What Does the Future Hold?

In version 4.0 of the CLR thread pool, the ‘thread injection and retirement algorithm’ has changed. When there are more running threads than CPUs, instead of creating new threads at a rate of 1 thread per 0.5 second, the new thread pool takes the liberty of ‘holding back’ threads (i.e., reducing the concurrency level) for a while.

In addition, the maximum thread count is NOT set to an arbitrary number (like the 25 or 250 per CPU of earlier versions) that is big enough to help prevent deadlocks; instead, it is set to the maximum the machine can handle within the limits of the process’s virtual address space.

Each thread consumes 1 MB of committed memory, so an application that allows an unbounded number of parallel tasks will sooner or later run out of contiguous virtual address space. The thread pool therefore refuses to introduce a new thread if doing so would cause an OutOfMemoryException.
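A minimal sketch of such a cap, assuming a fixed 1 MB per thread as stated above, a 2 GB address space (the default user-mode address space of a 32-bit Windows process) and a hypothetical 500 MB already used by the rest of the process. The function names are illustrative, not CLR APIs, and the model ignores fragmentation, which in practice lowers the limit because each stack needs contiguous space.

```python
MB = 1024 * 1024


def can_inject_thread(live_threads, used_bytes, address_space_bytes, stack_bytes=1 * MB):
    """Hypothetical check: allow one more thread only if its stack still fits."""
    committed = used_bytes + live_threads * stack_bytes
    return committed + stack_bytes <= address_space_bytes


def max_injectable_threads(used_bytes, address_space_bytes, stack_bytes=1 * MB):
    """Largest thread count whose stacks fit in the remaining address space."""
    return max(0, (address_space_bytes - used_bytes) // stack_bytes)


limit = max_injectable_threads(500 * MB, 2048 * MB)
print(limit)  # 1548
print(can_inject_thread(limit - 1, 500 * MB, 2048 * MB))  # True
print(can_inject_thread(limit, 500 * MB, 2048 * MB))  # False
```

Deriving the cap from the address space, rather than from a fixed per-CPU constant, is what lets the limit track the actual process instead of guessing a number that is either too small to avoid deadlocks or too large to fit in memory.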