Testing in Production (TiP) is the most important mind-shift required for building and operating a successful service at scale. This post outlines the benefits of Testing in Production, walks through the methodologies and explains the practices that can be applied to mitigate the associated risks.

Abstract

As your service grows and becomes more complex, it becomes increasingly difficult to mimic the production environment and predict how the service will be configured and used by users. For these reasons, extensive up-front testing becomes a less and less effective tool for assessing quality, and Testing in Production becomes a necessity.

How much testing should we do in production? The better we get at mitigating the associated risks, the more we can and should do, starting with building telemetry pipelines and running Synthetic Transactions, and progressing to Load Testing and Fault Injection in production.

The extra load that we put on production and the habit of presenting users with code that received less up-front testing don’t come without risk: downtime, disappointed customers, SLA breaches, etc. Luckily, since we are building a cloud service, we can control the deployment, change configuration to turn features on or off, and push (or roll back) new bits whenever we want. Testing in Production methodologies include practices like Synthetic Transactions, Canary Deployment, Controlled Test Flight and Data Driven Quality, which have proven very useful in mitigating these risks.

Implementing these methodologies is not easy, to say the least. To make this all work, the engineering team must own the production environment (DevOps). The service needs to be separated into small, independently deployable, versioned, backward-compatible and patchable services (a monolithic codebase is discouraged). Finally, the development process must be efficient enough to support rapid yet high-quality releases.

Life Without TiP

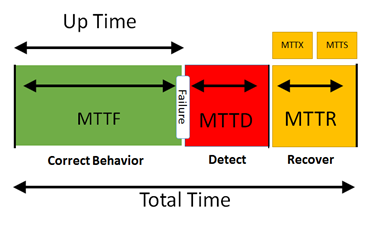

Engineering teams that don’t put their testing and engineering focus in production usually find that 1) it takes a long time to detect failures in production (aka MTTD - Mean Time to Detect) and 2) it takes a long time to develop a fix and deploy it with confidence (aka MTTR - Mean Time to Recover).

MTTF stands for Mean Time to Failure, which is the amount of time during which the service is up.

The formula for availability is Up Time (MTTF) / Total Time (MTTF + MTTD + MTTR). It follows that in order to maximize availability we need to either increase MTTF (i.e. aim for zero failures) or reduce MTTD and MTTR (combined, they are also referred to as MTTH - Mean Time to Heal).
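To make the trade-off concrete, here is a minimal sketch of the availability formula in Python. The function name and the numbers (in hours) are made up for illustration; they are not measurements from a real service.

```python
def availability(mttf: float, mttd: float, mttr: float) -> float:
    """Availability = MTTF / (MTTF + MTTD + MTTR), all in the same time unit."""
    total = mttf + mttd + mttr
    if total <= 0:
        raise ValueError("total time must be positive")
    return mttf / total


# Hypothetical numbers, in hours: the same failure rate (MTTF),
# but the second service detects and recovers much faster.
baseline = availability(mttf=720, mttd=4, mttr=8)
faster_healing = availability(mttf=720, mttd=0.5, mttr=1)

print(f"baseline:       {baseline:.4%}")
print(f"faster healing: {faster_healing:.4%}")
```

With MTTF held constant, shrinking MTTD and MTTR is enough to raise availability, which is the lever that TiP pulls.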

A popular way to increase MTTF is by running tests in test labs (scaled-down versions of the production environment). The problem with lab tests is that they require significant engineering investment. These tests tend to fail, most of the time on false positives (test bugs). It’s also difficult to predict how users will use the system, so automated test cases can only get us so far. Lastly, most production environments are very complex; it’s hard (if not impossible) to simulate the configuration, network, load balancers and other (3rd party) hardware and software that make up the production environment.

Since we can’t predict how users will use our service and we can’t mimic the production environment, we practically have no choice: we must Test in Production. However, we must also acknowledge the associated risks and come up with the right mitigations.

The Twist (Engineering Investment)

The first thing to change when shifting to TiP is to increase the investment in dev testing, which includes unit testing and continuous integration. These unit tests run in isolation and don’t require a lab. Since they run fast and execute more frequently, they help to improve the so-called ‘dev inner loop’, which means that we can get faster feedback on the quality of a given change.

By applying TiP methodologies like Canary Deployment (covered in the next chapter) and with our improved dev inner loop, we can push small and targeted changes directly to the Pre-Production (Dogfood/Beta) environment, potentially bypassing the traditional up-front testing in the lab. The Pre-Production environment is deployed in our production data center. It is used by the engineering team and by internal users. The main tool to assess quality in this environment is Data Mining. In addition to the traffic generated by real users, we can run Synthetic Tests (potentially re-using the end-to-end lab tests) to trigger new scenarios.

We can use the time that we saved to invest in getting more meaningful insights from the data that we collect: insights that allow us to assess quality and detect the bugs that impact customers (the ones that actually need fixing). We can also invest more in synthetic tests, alerting, reducing noise, auto-healing, etc.

Methodologies

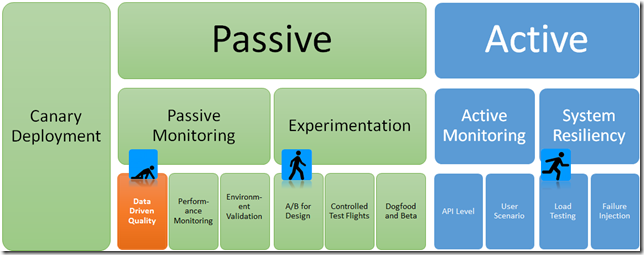

TiP Methodologies are divided into Passive and Active. The Passive methodologies include data mining, which is used to assess quality and measure performance. The Active ones are riskier; they include Synthetic Tests and Load Testing.

We also separate the methodologies into Crawl, Walk and Run. Crawl methodologies are lower risk. Walk and Run methodologies are riskier and require the tools that we built while crawling. We can use this system to start small and pick the low-hanging fruit; as we get better, we can mitigate the risks associated with the Walk and Run methodologies.

Data Driven Quality (Passive, Crawl)

The traditional way to assess quality is via manual testing or automated tests. Data driven quality is about crawling through the data that the service generates (logs, counters, etc.) in order to assess quality. To enable this, we need to instrument the code so that our service produces meaningful data. Alan Page does a great job of explaining this mind shift.

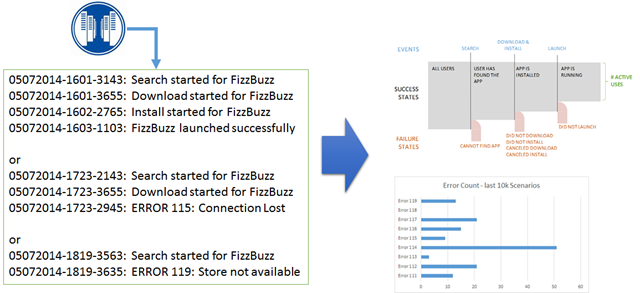

Here’s the example from Alan’s blog: Say that we are building an AppStore service. The service enables users to locate an application in the store, then download and install the app. The traditional approach is to use test automation to verify the happy path (can I find an app, download and install it?), and to test the failure cases by injecting failures into the system. We typically use this test automation to assess the quality of the service in order to decide if we can go to production.

The Data Driven approach suggests that we 1) instrument the code so that the service logs all the important events, including failure events, and 2) mine the log data that the service generates to assess quality.

Using the appropriate tools, we can analyze the data that’s coming from our preproduction and production environments to generate charts that look like this:

If we can ship new bits to our preproduction environment frequently enough (say every day), we can use the analysis results to decide if the quality of the bits is high enough to go to production.
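As a rough illustration of mining log data to make that decision, the sketch below computes a success rate per event from structured log lines. The JSON log format, the event names (`search`, `download`, `install`) and the 99% threshold are all assumptions made for this example, not something prescribed by the approach.

```python
import json
from collections import Counter


def success_rates(log_lines):
    """Return {event: fraction of successful occurrences} from JSON log lines."""
    total, ok = Counter(), Counter()
    for line in log_lines:
        record = json.loads(line)
        total[record["event"]] += 1
        if record["status"] == "ok":
            ok[record["event"]] += 1
    return {event: ok[event] / total[event] for event in total}


def ready_for_production(rates, threshold=0.99):
    """Hypothetical gate: every event must meet the success threshold."""
    return all(rate >= threshold for rate in rates.values())


# Made-up log lines standing in for what an instrumented AppStore service might emit.
sample_log = [
    '{"event": "search", "status": "ok"}',
    '{"event": "download", "status": "ok"}',
    '{"event": "download", "status": "error"}',
    '{"event": "install", "status": "ok"}',
]

rates = success_rates(sample_log)
print(rates)
print("ship to production:", ready_for_production(rates))
```

In practice the log volume would call for a proper analytics pipeline rather than an in-memory loop, but the gate itself stays this simple: compare what real usage produced against a bar that we agreed on.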

Canary Deployment

Canary deployment is about deploying new code to a small subset of the machines in production, verifying that the new bits didn’t cause a regression (in functionality or performance), and slowly increasing the exposure of the bits to the rest of the machines in production.

By limiting the exposure to the new bits, we can minimize the impact of failures and can afford to release new bits with less up-front testing.
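The staged rollout can be sketched as a small loop. This is only an illustration under stated assumptions: the `deploy`, `is_healthy` and `rollback` callables and the stage fractions are hypothetical placeholders for whatever the deployment system and telemetry actually provide.

```python
def canary_rollout(machines, deploy, is_healthy, rollback,
                   stages=(0.05, 0.25, 1.0)):
    """Deploy to growing fractions of machines; roll everything back on regression.

    Returns True when the rollout reached all machines, False when it was rolled back.
    """
    deployed = []
    for fraction in stages:
        # Always touch at least one machine, even for tiny fleets.
        target = max(1, int(len(machines) * fraction))
        for machine in machines[len(deployed):target]:
            deploy(machine)
            deployed.append(machine)
        # Verify the new bits before widening the exposure.
        if not all(is_healthy(machine) for machine in deployed):
            for machine in deployed:
                rollback(machine)
            return False
    return True


# Usage with in-memory stand-ins for a real deployment system.
machines = [f"fe-{i:02d}" for i in range(20)]
running_new_bits = set()
succeeded = canary_rollout(
    machines,
    deploy=running_new_bits.add,
    is_healthy=lambda machine: True,  # assumption: health comes from telemetry
    rollback=running_new_bits.discard,
)
print(succeeded, len(running_new_bits))
```

A real health check would compare error rates and latency on the canary machines against the rest of the fleet over some soak period; here it is reduced to a boolean per machine.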

Controlled Test Flight

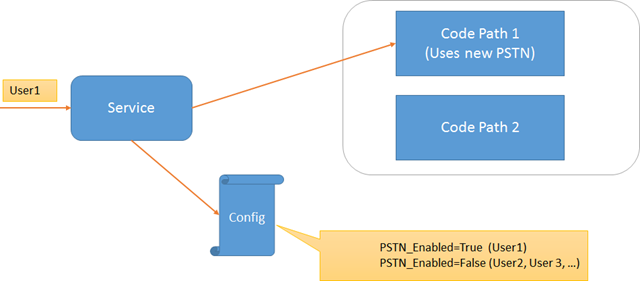

With Controlled Test Flight, we separate the users into two or more groups, and assign a ‘flight’ to each group via configuration. When a user issues a request, the service may route the user to a different experience depending on the flight to which the user is assigned.

Controlled Test Flight helps validate that new code works well in production without exposing all the users to it.
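One possible way to implement flight assignment is sketched below. The configuration shape, the flight name `new_checkout` and the user IDs are hypothetical; hashing the user ID is just one common way to keep the assignment stable across requests and machines.

```python
import hashlib

# Hypothetical flight configuration: explicit test users plus a percentage of everyone else.
FLIGHT_CONFIG = {
    "new_checkout": {"test_users": {"tester-1", "tester-2"}, "percent": 10},
}


def bucket(user_id: str, flight: str) -> int:
    """Map a user to a stable bucket in 0..99 so the assignment never flips."""
    digest = hashlib.sha256(f"{flight}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def in_flight(user_id: str, flight: str, config=FLIGHT_CONFIG) -> bool:
    settings = config.get(flight)
    if settings is None:
        return False  # unknown flight: everyone gets the current experience
    if user_id in settings["test_users"]:
        return True
    return bucket(user_id, flight) < settings["percent"]


def handle_request(user_id: str) -> str:
    """Route the request to an experience based on the user's flight."""
    if in_flight(user_id, "new_checkout"):
        return "new experience"
    return "current experience"


print(handle_request("tester-1"))
```

Because the flight lives in configuration, widening the exposure (or turning the flight off) is a config change rather than a new deployment.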

Controlled Test Flight is often used in combination with Canary Deployment. We use Canary Deployment to deploy new bits to a subset of the machines, and we use Controlled Test Flight to route only test users to the new bits. We trigger the important scenarios using the test users and verify that the new bits work as expected. If they do (the Canary didn’t die…), we release the bits to the rest of the machines and complete the experiment.

A/B Testing (Experimentation for Design)

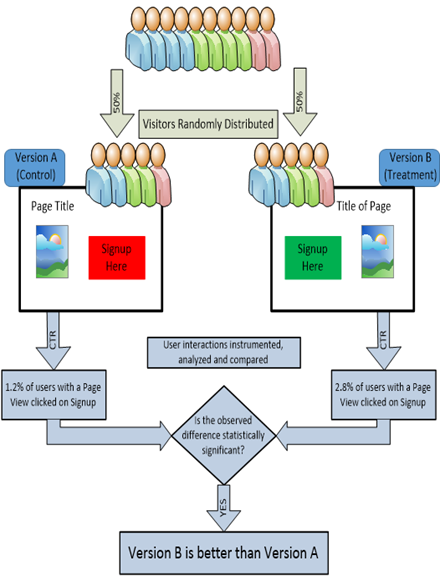

A/B Testing is very similar to Controlled Test Flight in the sense that in both we divide the users into groups and present each group with a different experience. The difference is in the intent. With Controlled Test Flight we try to verify that our new bits work as expected. Essentially, we check if we built it right. With A/B Testing we experiment with multiple experiences in order to make sure that we build the right thing.

With A/B Testing we divide the users into two groups. We present each group with a different experience, analyze user interaction patterns for each experience, and based on the results, we pick the best experience. Using this system we can detect ineffective features and cut our losses early, and we can detect successful features and increase the investment accordingly.

In the example below we try to determine which user experience will yield more signups. We present 50% of the users with a UI in which the Signup button is on the right-hand side. The remaining 50% will see a UI in which the Signup button is on the left-hand side. We run the experiment for a week. We calculate the click rate for each UI and, based on the results, we choose the UI that yielded more clicks.
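The click-rate comparison can be sketched as follows. The click and impression counts are invented for illustration. The z-score is an addition that the text above does not mention: it is a standard two-proportion test, included here as one reasonable way to check that the difference is not just noise.

```python
import math


def click_rate(clicks: int, impressions: int) -> float:
    return clicks / impressions if impressions else 0.0


def pick_winner(results):
    """results: {variant: (clicks, impressions)} -> variant with the highest click rate."""
    return max(results, key=lambda variant: click_rate(*results[variant]))


def z_score(a, b):
    """Two-proportion z-score for (clicks, impressions) pairs a and b."""
    (clicks_a, n_a), (clicks_b, n_b) = a, b
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0
    return (click_rate(clicks_b, n_b) - click_rate(clicks_a, n_a)) / std_err


# Made-up results after one week, with a 50/50 split of users.
results = {
    "signup_right": (120, 5000),
    "signup_left": (150, 5000),
}

winner = pick_winner(results)
z = z_score(results["signup_right"], results["signup_left"])
print(winner, round(z, 2))
```

A common rule of thumb treats an absolute z-score of roughly 1.96 or more as significant at the 95% level; below that, running the experiment longer is usually safer than declaring a winner.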

Synthetic Transaction Monitoring

Synthetic tests are automated tests running against the production instances. Synthetic tests can be divided into two groups: API Tests and User Scenario Tests.

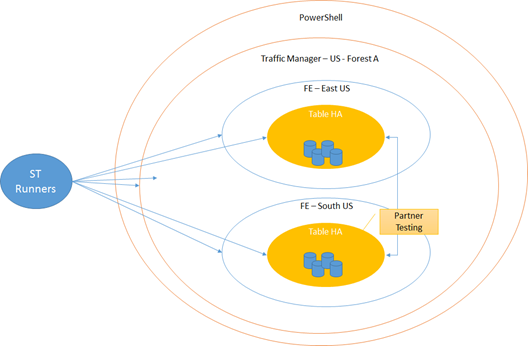

API Tests usually run against every component that exposes a public API. In the example below, the service can be used via PowerShell, which calls a region-wide API managed by Azure Traffic Manager. Traffic Manager routes the request to one of the FE services. The latter uses a Highly Available Table Store service.

Since each one of the layers exposes a public API, we run Synthetic Tests against each API. This way, when a failure surfaces in the upper layer, we are able to peel the onion and find the faulty layer.
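Peeling the onion can be sketched as running one probe per layer and reporting the deepest layer that fails. The layer names follow the example above; the probe functions are stubs invented for this sketch, where a real probe would call the layer's public API and validate the response.

```python
def run_probe(check) -> bool:
    """Run one synthetic check; any exception counts as a failure."""
    try:
        return bool(check())
    except Exception:
        return False


def find_faulty_layer(probes):
    """probes: list of (layer_name, check), ordered from the top layer down.

    Returns the deepest failing layer, or None if every probe passes.
    """
    failing = [name for name, check in probes if not run_probe(check)]
    return failing[-1] if failing else None


def table_store_probe():
    # Stub: simulate an outage in the lowest layer.
    raise TimeoutError("table store did not respond")


# Hypothetical probes; the upper layers fail only because the table store is down.
probes = [
    ("PowerShell client", lambda: False),
    ("Region-wide API (Traffic Manager)", lambda: False),
    ("FE service", lambda: False),
    ("Table Store", table_store_probe),
]

print(find_faulty_layer(probes))
```

Picking the deepest failing layer is a heuristic: it assumes failures propagate upward through the stack, which matches the layered setup described here but may not hold for every architecture.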

User Scenario Tests are about testing the service through the same interfaces used by the users. If the users interact with the service via a browser, we run the tests via a browser, etc.

Even for mature services where the important scenarios are already triggered by real users, Synthetic Tests are useful for triggering new scenarios that are not yet discoverable by real users. In addition, they keep the service busy when there’s low engagement from real users.