This post introduces the MVP-VM (Model View Presenter - View Model) design pattern, which is the Windows Forms (WinForms) equivalent of WPF/Silverlight MVVM. The MVP-VM pattern is best suited to WinForms applications that require full test coverage and use data binding extensively to synchronize the presentation with the domain model.

Evolution

Before we dig deep into MVP-VM, let's quickly review the patterns from which it evolved.

Presentation Model

Martin Fowler introduced the Presentation Model pattern as a way of separating presentation behavior from the user interface, mainly to promote unit testing. With Presentation Model, every View has a Presentation Model that encapsulates its presentation behavior (such as how to handle a click on buttonXXX) and state (such as whether a check box is checked).

Whenever the View changes, it informs its Presentation Model about the change. In response, the Presentation Model updates the Model as appropriate, reads new data from the Model and populates its internal view state. In turn, the View updates the screen according to the Presentation Model's updated view state.

The downside of this pattern is that a lot of tedious code is required to keep the Presentation Model and the View synchronized. One way to avoid writing the synchronization code is to bind the Presentation Model properties to the appropriate widgets on the View, so that changes made to the Model are automatically reflected in the View, and changes made by the user automatically flow from the View, through the Presentation Model, to the underlying Model object.

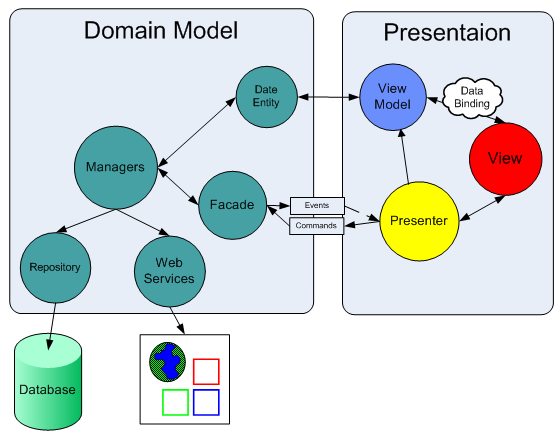

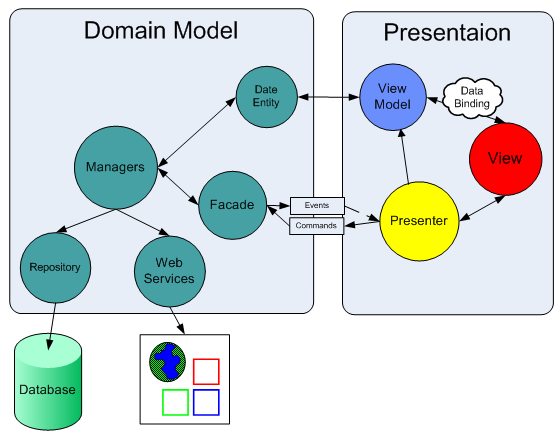

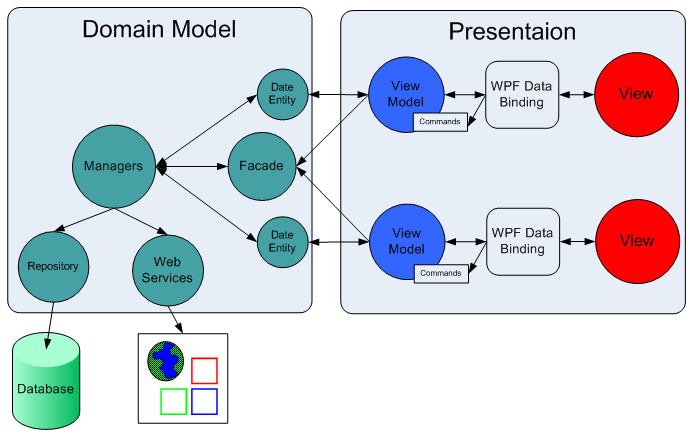
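A minimal C# sketch of a Presentation Model, assuming a hypothetical `Customer` model with an `IsPreferred` flag: the class holds view state (`IsPreferred` for a check box, plus a derived `DiscountLabel`) and presentation behavior (`ResetClicked`), and it implements `INotifyPropertyChanged` so that a data-binding engine, rather than hand-written synchronization code, can keep bound widgets up to date. All names and the discount rule are invented for illustration.

```csharp
using System.ComponentModel;

// Hypothetical domain object, for illustration only.
public class Customer
{
    public string Name { get; set; }
    public bool IsPreferred { get; set; }
}

// Presentation Model sketch: view state as bindable properties, presentation
// behavior as plain methods, and no reference to any UI toolkit.
public class CustomerPresentationModel : INotifyPropertyChanged
{
    private readonly Customer m_customer;

    public CustomerPresentationModel(Customer customer)
    {
        m_customer = customer;
    }

    public event PropertyChangedEventHandler PropertyChanged;

    // State backed by the model: a bound check box reads and writes it.
    public bool IsPreferred
    {
        get { return m_customer.IsPreferred; }
        set
        {
            if (m_customer.IsPreferred == value) return;
            m_customer.IsPreferred = value;
            OnPropertyChanged(nameof(IsPreferred));
            // The derived label changed too, so tell bound widgets to refresh it.
            OnPropertyChanged(nameof(DiscountLabel));
        }
    }

    // View state derived from the model (hypothetical rule).
    public string DiscountLabel
    {
        get { return m_customer.IsPreferred ? "10% discount" : "No discount"; }
    }

    // Presentation behavior, e.g. what a "Reset" button click does.
    public void ResetClicked()
    {
        IsPreferred = false;
    }

    protected void OnPropertyChanged(string propertyName)
    {
        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(propertyName));
    }
}
```

Because nothing in the class touches a UI toolkit, a unit test can set `IsPreferred` and check both the model and the raised notifications, exactly as a bound check box would.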

MVVM (Model View View Model) for WPF

MVVM (Model View View Model) introduces an approach for separating the presentation from the data in environments that support data binding, such as WPF and Silverlight (see Developing Silverlight 4.0 Three Tiers App with MVVM). As you can see in the figure below, MVVM is almost identical to the Presentation Model pattern, except that the 'Presentation Model' is called the 'View Model' and two-way data binding is performed automatically by the WPF/Silverlight runtime (read more).

With WPF, the bindings between View and View Model are simple to construct because each View Model object is set as the DataContext of its paired View. If a property value in the View Model changes, the change automatically propagates to the View via data binding. When the user clicks a button in the View, a command on the View Model executes to perform the requested action. The View Model, never the View, performs all modifications made to the Model data.
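In WPF a button's `Command` property is bound to an `ICommand` (from `System.Windows.Input`) exposed by the View Model, for example `Command="{Binding SaveCommand}"` in XAML with the View Model set as the `DataContext`. The sketch below shows only the View Model side. `RelayCommand` and `CustomerEditViewModel` are hypothetical names: WPF defines the `ICommand` interface but not this relay-style implementation, which MVVM libraries usually supply. `SaveCount` stands in for a real Model update.

```csharp
using System;
using System.ComponentModel;
using System.Windows.Input;

// Hypothetical helper that adapts delegates to the ICommand interface.
public class RelayCommand : ICommand
{
    private readonly Action m_execute;
    private readonly Func<bool> m_canExecute;

    public RelayCommand(Action execute, Func<bool> canExecute)
    {
        m_execute = execute;
        m_canExecute = canExecute;
    }

    public event EventHandler CanExecuteChanged;

    public bool CanExecute(object parameter) { return m_canExecute(); }

    public void Execute(object parameter)
    {
        if (CanExecute(parameter)) m_execute();
    }

    // Asks bound buttons to re-query CanExecute (i.e. enable or disable themselves).
    public void RaiseCanExecuteChanged()
    {
        CanExecuteChanged?.Invoke(this, EventArgs.Empty);
    }
}

// Hypothetical View Model: a text box binds to Name, a button binds to SaveCommand.
public class CustomerEditViewModel : INotifyPropertyChanged
{
    private string m_name = "";
    private readonly RelayCommand m_saveCommand;

    public CustomerEditViewModel()
    {
        m_saveCommand = new RelayCommand(Save, () => m_name.Length > 0);
    }

    public event PropertyChangedEventHandler PropertyChanged;

    public ICommand SaveCommand { get { return m_saveCommand; } }

    public int SaveCount { get; private set; }

    public string Name
    {
        get { return m_name; }
        set
        {
            m_name = value ?? "";
            PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(nameof(Name)));
            m_saveCommand.RaiseCanExecuteChanged();
        }
    }

    // A real View Model would modify the Model here; this sketch only counts calls.
    private void Save() { SaveCount++; }
}
```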

MVP-VM (Model View Presenter - View Model)

Starting with .NET Framework 2.0, the Visual Studio designer supports binding objects to controls at design time, which greatly simplifies and encourages the use of data binding in WinForms applications. Even when designing a simple UI without any fancy pattern, it often makes sense to create a View Model class that represents the View's display (a property for every widget) and bind it to the View at design time. You can read all about it in ‘Data Binding of Business Objects in Visual Studio .NET 2005/8’.

When creating a .NET WinForms application that consists of many Views presenting a complex domain model and containing complex presentation logic, it often makes sense to separate the Views from the domain model using the Model View Presenter pattern. One can use either the Supervising Controller or the Passive View variant, depending on the required test coverage and the need for data binding.

With Supervising Controller, data binding is simple, but presentation logic cannot be fully tested since the Views (which are usually mocked in tests) are in charge of retrieving data from the Model. With Passive View, the thin Views allow full test coverage, and the fact that the Presenter is in charge of the entire workflow greatly simplifies testing. However, direct binding between the Model and the View is discouraged. For more details please refer to ‘Model View Presenter Design Pattern with .NET Winforms’.
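To make the Passive View testability argument concrete, here is a minimal sketch with hypothetical names (`ICustomerView`, `CustomerPresenter`, `FakeCustomerView`). The view exposes only per-widget accessors and an event; the presenter copies data into it and decides whether Save is enabled. Because the presenter sees only the interface, a test can drive it with a fake view. Note the manual Model-to-View copy in the constructor: this is the synchronization code that data binding would otherwise replace.

```csharp
using System;

// Passive View: one accessor per widget and no logic at all.
public interface ICustomerView
{
    string NameText { get; set; }
    bool SaveButtonEnabled { set; }
    event EventHandler NameChanged;
}

// The presenter owns all presentation logic, including moving data to and from widgets.
public class CustomerPresenter
{
    private readonly ICustomerView m_view;

    public CustomerPresenter(ICustomerView view, string initialName)
    {
        m_view = view;
        m_view.NameText = initialName;   // manual Model -> View synchronization
        UpdateSaveButton();
        m_view.NameChanged += (sender, args) => UpdateSaveButton();
    }

    private void UpdateSaveButton()
    {
        m_view.SaveButtonEnabled = !string.IsNullOrWhiteSpace(m_view.NameText);
    }
}

// Test double standing in for the real form, so no UI is needed in unit tests.
public class FakeCustomerView : ICustomerView
{
    public string NameText { get; set; }
    public bool SaveEnabled { get; private set; }
    public bool SaveButtonEnabled { set { SaveEnabled = value; } }
    public event EventHandler NameChanged;

    public void SimulateTyping(string text)
    {
        NameText = text;
        NameChanged?.Invoke(this, EventArgs.Empty);
    }
}
```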

MVP-VM combines the two patterns so that we don't have to give up data binding or cut down on testability. This is achieved by adapting the Passive View pattern while allowing an indirect link, through the View Model, between the Model and the View.

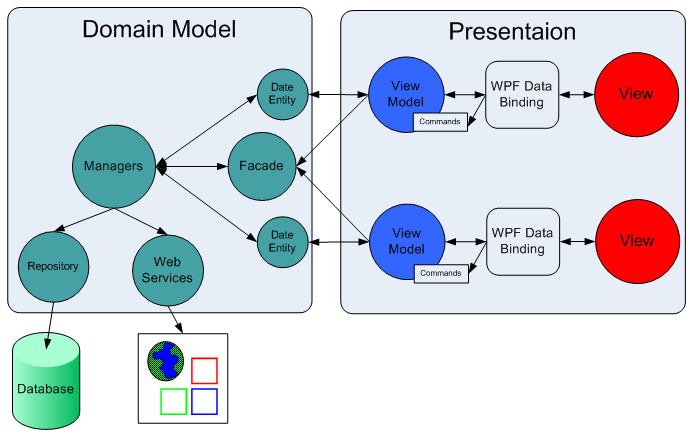

MVP-VM Overview

The View is in charge of presenting the data and processing user input. It is tightly coupled to the Presenter, so when user input is triggered (e.g. a button is clicked) it can directly call the appropriate method on the Presenter. Its widgets are bound to the matching View Model properties: when a View Model property changes, the linked widget changes accordingly, and when a widget value changes, the linked View Model property changes accordingly.

The View Model exposes properties that are bound to its matching View widgets. Some of its properties are linked directly to the Model object, so any change made to the Model object automatically translates into a change in the View Model and, as a result, appears on the View, and vice versa. Other properties reflect View state that is not related to Model data, e.g. whether buttonXXX is enabled. In some cases the View Model is merely a snapshot of the Model object's state, so it exposes read-only properties; in this case the attached widgets cannot be updated by the user.
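The three kinds of View Model properties described above can be sketched as follows, assuming a hypothetical `CustomerEntity` and `CustomerDetailsViewModel`: `Name` is linked to the Model, `CompanyName` is a read-only snapshot, and `SaveEnabled` is pure view state. Implementing `INotifyPropertyChanged` is an assumption of this sketch (the case study's view model does not do it), but it is the interface that WinForms and WPF binding listen to in order to refresh a widget when a property changes in code.

```csharp
using System.ComponentModel;

// Hypothetical data entity, for illustration only.
public class CustomerEntity
{
    public string Name { get; set; }
    public string CompanyName { get; set; }
}

public class CustomerDetailsViewModel : INotifyPropertyChanged
{
    private readonly CustomerEntity m_entity;
    private bool m_saveEnabled;

    public CustomerDetailsViewModel(CustomerEntity entity)
    {
        m_entity = entity;
    }

    public event PropertyChangedEventHandler PropertyChanged;

    // Linked to the Model: the bound text box edits the entity directly.
    public string Name
    {
        get { return m_entity.Name; }
        set
        {
            m_entity.Name = value;
            OnPropertyChanged(nameof(Name));
        }
    }

    // Read-only snapshot of Model data: with no setter, binding cannot write user edits back.
    public string CompanyName
    {
        get { return m_entity.CompanyName; }
    }

    // Pure view state with no Model counterpart, e.g. whether the Save button is enabled.
    public bool SaveEnabled
    {
        get { return m_saveEnabled; }
        set
        {
            if (m_saveEnabled == value) return;
            m_saveEnabled = value;
            OnPropertyChanged(nameof(SaveEnabled));
        }
    }

    private void OnPropertyChanged(string propertyName)
    {
        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(propertyName));
    }
}
```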

The Presenter is in charge of presentation logic. It creates the View Model object, assigns it the appropriate Model object(s), and binds it to the View. When it is informed that user input has been triggered, it acts according to application rules, e.g. commanding the Model to change as appropriate or making the appropriate changes to the View Model. It is synchronized with the Model via Observer Synchronization, so it can react to changes in the Model according to application rules. Where it is more appropriate for the Presenter to change the View directly rather than through its View Model, the Presenter can interact with the View through its interface.
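A minimal sketch of Observer Synchronization between the Presenter and the Model, using hypothetical `CustomerRepository`, `CustomerListViewModel` and `CustomerListPresenter` classes: the model raises a plain .NET event, and the presenter subscribes in its constructor and applies an application rule (here, a status line showing the customer count) to the View Model. For brevity this view model does not raise change notifications itself.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model that announces its own changes (the observed subject).
public class CustomerRepository
{
    private readonly List<string> m_names = new List<string>();

    public event EventHandler CustomersChanged;

    public int Count { get { return m_names.Count; } }

    public void Add(string name)
    {
        m_names.Add(name);
        CustomersChanged?.Invoke(this, EventArgs.Empty);
    }
}

// Hypothetical view model; a real one would also raise PropertyChanged.
public class CustomerListViewModel
{
    public string StatusText { get; set; }
}

public class CustomerListPresenter : IDisposable
{
    private readonly CustomerRepository m_repository;
    private readonly CustomerListViewModel m_viewModel;

    public CustomerListPresenter(CustomerRepository repository, CustomerListViewModel viewModel)
    {
        m_repository = repository;
        m_viewModel = viewModel;
        m_repository.CustomersChanged += OnCustomersChanged;   // observe the Model
        Refresh();
    }

    private void OnCustomersChanged(object sender, EventArgs e)
    {
        Refresh();
    }

    // Application rule: the status line always reflects the current count.
    private void Refresh()
    {
        m_viewModel.StatusText = m_repository.Count + " customer(s)";
    }

    // Stop observing once the screen closes.
    public void Dispose()
    {
        m_repository.CustomersChanged -= OnCustomersChanged;
    }
}
```

Unsubscribing in `Dispose` matters when the model outlives the screen: otherwise the model's event keeps the presenter, and through it the view model, reachable after the screen is gone.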

The Model is a collection of business objects that can include data and behaviors such as querying and updating the database and interacting with external services. Objects that contain only data are referred to as ‘data entities’.

How does it Work?

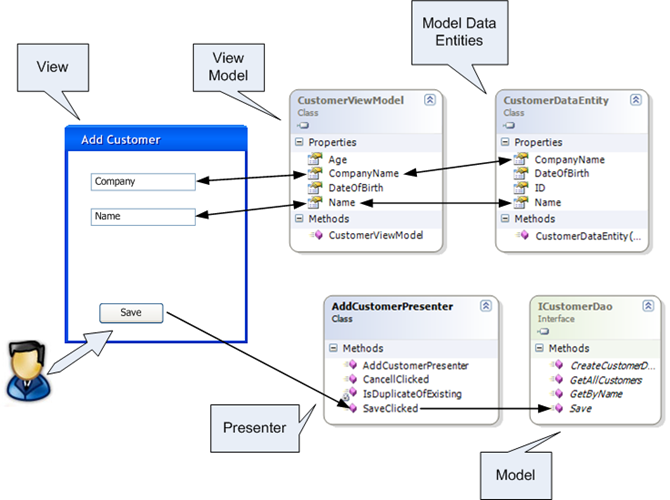

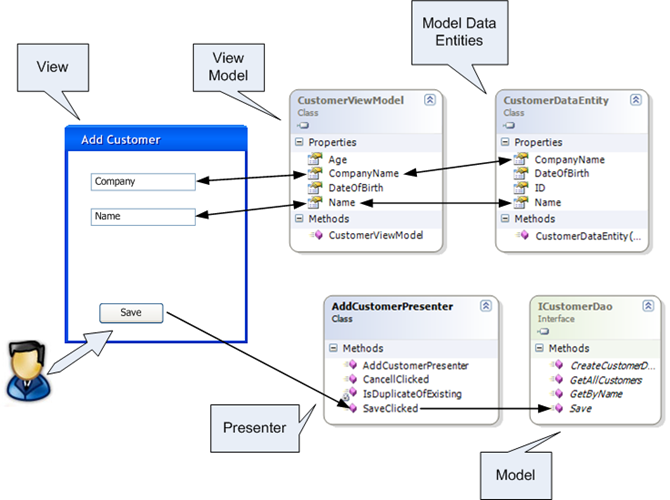

As you can see in the figure above, each UI widget is bound to a matching property on the ‘customer view model’, and each property of the ‘customer view model’ is linked to a matching property on the ‘customer data entity’. For example, when the user changes the value of the ‘Name’ textbox, the ‘Name’ property of the ‘customer view model’ is automatically updated via data binding, which in turn updates the ‘Name’ property of the ‘customer data entity’. In the other direction, when the ‘customer data entity’ changes, the changes are reflected in the ‘customer view model’, which causes the appropriate widgets on the view to change via data binding.
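One detail the figure glosses over: for a change that starts in the ‘customer data entity’ to reach the widgets, something has to tell the binding engine that the ‘customer view model’ property changed. The case study's `CustomerViewModel` only passes values through, so such changes would presumably show up only after the binding is refreshed. A sketch of one way to close that gap, assuming the entity implements `INotifyPropertyChanged` (the hypothetical `ObservableCustomerEntity`), is for the view model to re-raise the entity's notifications as its own. It relies on the view model's property names mirroring the entity's.

```csharp
using System.ComponentModel;

// Hypothetical entity that announces its own changes.
public class ObservableCustomerEntity : INotifyPropertyChanged
{
    private string m_name;

    public event PropertyChangedEventHandler PropertyChanged;

    public string Name
    {
        get { return m_name; }
        set
        {
            if (m_name == value) return;
            m_name = value;
            PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(nameof(Name)));
        }
    }
}

// Re-raises the entity's notifications with itself as the sender, so widgets
// bound to the view model refresh whichever side the change started on.
public class RelayingCustomerViewModel : INotifyPropertyChanged
{
    private readonly ObservableCustomerEntity m_entity;

    public event PropertyChangedEventHandler PropertyChanged;

    public RelayingCustomerViewModel(ObservableCustomerEntity entity)
    {
        m_entity = entity;
        // Works because the view model's property names match the entity's.
        m_entity.PropertyChanged += (sender, args) =>
            PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(args.PropertyName));
    }

    // View -> view model -> entity; the entity's own event then notifies the view.
    public string Name
    {
        get { return m_entity.Name; }
        set { m_entity.Name = value; }
    }
}
```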

When the user clicks the ‘Save’ button, the view calls the appropriate method on the presenter, which responds according to application logic; in this case, it calls the ‘Save’ method of the ‘customer dao’ object.

When the application logic is more sophisticated, the presenter may bypass the ‘view model’ and make direct changes to the view through its abstraction. In some cases a ‘view model’ property is linked to a view widget on one side but not to a model object on the other side; the presenter then changes that ‘view model’ property itself, and data binding carries the change to the view widget.
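Both escape hatches can be sketched with hypothetical names (`SearchViewModel`, `ISearchView`, `SearchPresenter`, `FakeSearchView`): `Hint` is a view model property with no model counterpart, which the presenter sets and data binding would then display, while moving keyboard focus is imperative, so the presenter calls the view abstraction directly. The three-character rule is an invented example.

```csharp
using System.ComponentModel;

// View model property with no Model counterpart: only the presenter sets Hint.
public class SearchViewModel : INotifyPropertyChanged
{
    private string m_hint = "";

    public event PropertyChangedEventHandler PropertyChanged;

    public string Query { get; set; } = "";

    public string Hint
    {
        get { return m_hint; }
        set
        {
            m_hint = value;
            PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(nameof(Hint)));
        }
    }
}

// Narrow view abstraction for what binding cannot express well.
public interface ISearchView
{
    void FocusQueryBox();
}

public class SearchPresenter
{
    private readonly SearchViewModel m_viewModel;
    private readonly ISearchView m_view;

    public SearchPresenter(SearchViewModel viewModel, ISearchView view)
    {
        m_viewModel = viewModel;
        m_view = view;
    }

    public void SearchClicked()
    {
        if (m_viewModel.Query.Trim().Length < 3)
        {
            // Indirect: the bound hint label updates through data binding.
            m_viewModel.Hint = "Type at least 3 characters.";
            // Direct: focus is imperative, so go through the view interface.
            m_view.FocusQueryBox();
            return;
        }
        m_viewModel.Hint = "";
    }
}

// Test double that records the direct calls.
public class FakeSearchView : ISearchView
{
    public int FocusCalls;

    public void FocusQueryBox() { FocusCalls++; }
}
```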

Case Study – MVP-VM

In the following case study, the MVP-VM pattern is used to separate the concerns of a simple application that presents a list of customers and allows adding a new customer. We’ll focus on the ‘Add Customer’ screen.

Class Diagram – Add New Customer

The AddCustomerPresenter holds references to the AddCustomerViewModel, the AddCustomerView and the CustomerDao (model). It references the AddCustomerViewModel and the AddCustomerView so it can establish data binding between the two, and it references the CustomerDao so it can update it and subscribe to its events.

The AddCustomerView holds a reference to the AddCustomerPresenter so it can call the ‘SaveClicked’ method when the ‘Save’ button is clicked.

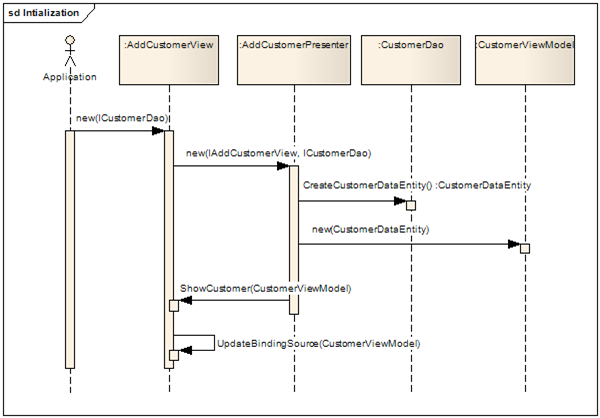

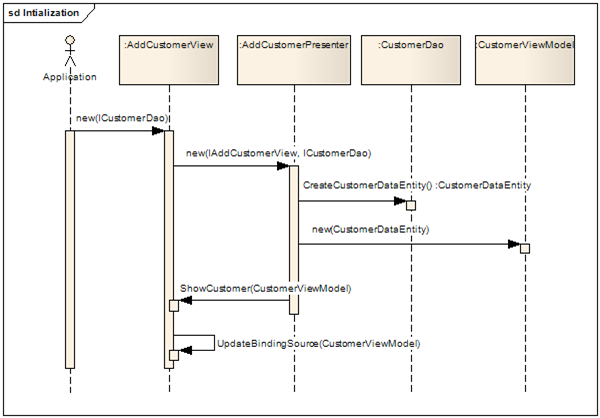
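The case study's code uses `CustomerDataEntity` and `ICustomerDao` without listing them. The declarations below are a reconstruction inferred from the call sites (`CreateCustomerDataEntity`, `GetByName`, `Save`, and the `Name`, `CompanyName` and `DateOfBirth` properties); the real ones may differ. `InMemoryCustomerDao`, its default date of birth and its case-insensitive name match are assumptions, handy for tests. By the same reasoning, `IAddCustomerView` would declare `ShowCustomer`, `ReadUserInput`, `ShowError` and `Close`, the last of which `Form` already implements.

```csharp
using System;
using System.Collections.Generic;

// Reconstructed from how the view model and presenter use it.
public class CustomerDataEntity
{
    public string Name { get; set; }
    public string CompanyName { get; set; }
    public DateTime DateOfBirth { get; set; }
}

// Reconstructed from the presenter's calls.
public interface ICustomerDao
{
    CustomerDataEntity CreateCustomerDataEntity();
    CustomerDataEntity GetByName(string name);   // null when no customer matches
    void Save(CustomerDataEntity customer);
}

// Hypothetical in-memory model for unit tests and demos.
public class InMemoryCustomerDao : ICustomerDao
{
    private readonly List<CustomerDataEntity> m_customers = new List<CustomerDataEntity>();

    public int Count { get { return m_customers.Count; } }

    public CustomerDataEntity CreateCustomerDataEntity()
    {
        return new CustomerDataEntity { DateOfBirth = DateTime.Today };
    }

    public CustomerDataEntity GetByName(string name)
    {
        // List<T>.Find returns null for reference types when nothing matches.
        return m_customers.Find(c => string.Equals(c.Name, name, StringComparison.OrdinalIgnoreCase));
    }

    public void Save(CustomerDataEntity customer)
    {
        if (!m_customers.Contains(customer)) m_customers.Add(customer);
    }
}
```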

Sequence Diagram - Initialization

The AddCustomerView is instantiated by some class in the application and injected with an instance of the CustomerDao (model). It instantiates the AddCustomerPresenter and injects it with the CustomerDao and with itself. The AddCustomerPresenter prompts the CustomerDao to create a new CustomerDataEntity, instantiates the AddCustomerViewModel, injecting it with the newly created CustomerDataEntity, and calls ‘ShowCustomer’ on the AddCustomerView in order to data bind it to the AddCustomerViewModel.

Sequence Diagram - Saving New Customer

The AddCustomerView responds to a click on the ‘Save’ button and calls the appropriate method on the AddCustomerPresenter. The AddCustomerPresenter calls ‘ReadUserInput’ on the AddCustomerView, which in response calls EndEdit on its internal ‘binding source’, committing any pending edits in its widgets to the AddCustomerViewModel (read more about data binding of business objects). The AddCustomerPresenter then evaluates the CustomerDataEntity (which was updated automatically since it’s linked to the AddCustomerViewModel) and checks whether the new customer already exists in the data storage. If there is no duplicate, it commands the CustomerDao (model) to save the customer; otherwise it asks the view to display an error.
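Because the presenter talks to the view only through `IAddCustomerView` and to the model only through `ICustomerDao`, both sequences above can be exercised without a form. To stay self-contained, the sketch re-declares trimmed-down stand-ins for the case study's types (only `Name` survives) and a condensed presenter with the same save logic, plus hypothetical test doubles `FakeAddCustomerView` and `FakeCustomerDao`. `FakeAddCustomerView.ReadUserInput` plays the role of the real view's `BindingSource.EndEdit()` call by copying the "typed" name into the view model.

```csharp
using System;
using System.Collections.Generic;

// Trimmed-down stand-ins mirroring the case study's types.
public class CustomerDataEntity { public string Name { get; set; } }

public class CustomerViewModel
{
    private readonly CustomerDataEntity m_entity;
    public CustomerViewModel(CustomerDataEntity entity) { m_entity = entity; }
    public CustomerDataEntity CustomerDataEntity { get { return m_entity; } }
    public string Name { get { return m_entity.Name; } set { m_entity.Name = value; } }
}

public interface ICustomerDao
{
    CustomerDataEntity CreateCustomerDataEntity();
    CustomerDataEntity GetByName(string name);
    void Save(CustomerDataEntity customer);
}

public interface IAddCustomerView
{
    void ShowCustomer(CustomerViewModel customerViewModel);
    void ReadUserInput();
    void ShowError(string message);
    void Close();
}

// Condensed presenter: initialization and the Save path.
public class AddCustomerPresenter
{
    private readonly IAddCustomerView m_view;
    private readonly ICustomerDao m_customerDao;
    private readonly CustomerViewModel m_viewModel;

    public AddCustomerPresenter(IAddCustomerView view, ICustomerDao customerDao)
    {
        m_view = view;
        m_customerDao = customerDao;
        m_viewModel = new CustomerViewModel(customerDao.CreateCustomerDataEntity());
        m_view.ShowCustomer(m_viewModel);
    }

    public void SaveClicked()
    {
        m_view.ReadUserInput();
        CustomerDataEntity entity = m_viewModel.CustomerDataEntity;
        if (m_customerDao.GetByName(entity.Name) != null)
        {
            m_view.ShowError(string.Format("Customer '{0}' already exists", entity.Name));
            return;
        }
        m_customerDao.Save(entity);
        m_view.Close();
    }
}

// Test doubles that record what the presenter asked for.
public class FakeAddCustomerView : IAddCustomerView
{
    public CustomerViewModel Bound;
    public string PendingName;   // what the "user typed" before clicking Save
    public string Error;
    public bool Closed;

    public void ShowCustomer(CustomerViewModel customerViewModel) { Bound = customerViewModel; }
    public void ReadUserInput() { if (PendingName != null) Bound.Name = PendingName; }
    public void ShowError(string message) { Error = message; }
    public void Close() { Closed = true; }
}

public class FakeCustomerDao : ICustomerDao
{
    public readonly List<CustomerDataEntity> Saved = new List<CustomerDataEntity>();

    public CustomerDataEntity CreateCustomerDataEntity() { return new CustomerDataEntity(); }
    public CustomerDataEntity GetByName(string name) { return Saved.Find(c => c.Name == name); }
    public void Save(CustomerDataEntity customer) { Saved.Add(customer); }
}
```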

Here’s the code:

View

```csharp
using System;
using System.Windows.Forms;

// InitializeComponent and the cusomerViewModelBindingSource, m_btnSave and
// m_btnCancel members come from the designer-generated half of this partial class.
public partial class AddCustomerView : Form, IAddCustomerView
{
    private readonly AddCustomerPresenter m_presenter;

    public AddCustomerView(ICustomerDao dao)
    {
        InitializeComponent();

        // The presenter binds the view model to this view from its constructor
        m_presenter = new AddCustomerPresenter(this, dao);
    }

    // Called by the presenter: bind all widgets to the given view model
    public void ShowCustomer(CustomerViewModel customerViewModel)
    {
        cusomerViewModelBindingSource.DataSource = customerViewModel;
    }

    // Called by the presenter: commit pending widget edits to the view model
    public void ReadUserInput()
    {
        cusomerViewModelBindingSource.EndEdit();
    }

    public void ShowError(string message)
    {
        MessageBox.Show(message, "Error", MessageBoxButtons.OK, MessageBoxIcon.Error);
    }

    private void m_btnSave_Click(object sender, EventArgs e)
    {
        m_presenter.SaveClicked();
    }

    private void m_btnCancel_Click(object sender, EventArgs e)
    {
        m_presenter.CancellClicked();
    }
}
```

Presenter

```csharp
public class AddCustomerPresenter
{
    private readonly IAddCustomerView m_view;
    private readonly ICustomerDao m_customerDao;
    private readonly CustomerViewModel m_viewModel;

    public AddCustomerPresenter(IAddCustomerView view, ICustomerDao customerDao)
    {
        m_view = view;
        m_customerDao = customerDao;

        // Create the data entity and wrap it in a view model
        CustomerDataEntity customerDataEntity = customerDao.CreateCustomerDataEntity();
        m_viewModel = new CustomerViewModel(customerDataEntity);

        // Bind the view model to the view
        m_view.ShowCustomer(m_viewModel);
    }

    public void SaveClicked()
    {
        // Commit pending widget edits; through the view model they reach the entity
        m_view.ReadUserInput();

        CustomerDataEntity customerDataEntity = m_viewModel.CustomerDataEntity;
        bool isDuplicate = IsDuplicateOfExisting(customerDataEntity);
        if (!isDuplicate)
        {
            m_customerDao.Save(customerDataEntity);

            m_view.Close();
        }
        else
        {
            m_view.ShowError(string.Format("Customer '{0}' already exists", m_viewModel.Name));
        }
    }

    private bool IsDuplicateOfExisting(CustomerDataEntity newCustomerDataEntity)
    {
        CustomerDataEntity duplicateCustomerDataEntity =
            m_customerDao.GetByName(newCustomerDataEntity.Name);

        return duplicateCustomerDataEntity != null;
    }

    public void CancellClicked()
    {
        m_view.Close();
    }
}
```

ViewModel

```csharp
using System;

// Wraps a CustomerDataEntity: every property except Age reads and writes
// straight through to the entity.
public class CustomerViewModel
{
    private readonly CustomerDataEntity m_customerDataEntity;

    public CustomerViewModel(CustomerDataEntity customerDataEntity)
    {
        m_customerDataEntity = customerDataEntity;
    }

    public string Name
    {
        get { return m_customerDataEntity.Name; }
        set { m_customerDataEntity.Name = value; }
    }

    public string CompanyName
    {
        get { return m_customerDataEntity.CompanyName; }
        set { m_customerDataEntity.CompanyName = value; }
    }

    public DateTime DateOfBirth
    {
        get { return m_customerDataEntity.DateOfBirth; }
        set { m_customerDataEntity.DateOfBirth = value; }
    }

    // Read-only, derived value: a widget bound to it cannot update the model
    public int Age
    {
        get
        {
            DateTime today = DateTime.Today;
            DateTime dateOfBirth = m_customerDataEntity.DateOfBirth;
            int age = today.Year - dateOfBirth.Year;

            // Subtract a year if this year's birthday has not happened yet
            if (dateOfBirth.Date > today.AddYears(-age))
            {
                age--;
            }

            return age;
        }
    }

    public CustomerDataEntity CustomerDataEntity
    {
        get { return m_customerDataEntity; }
    }
}
```

Download

The case study can be downloaded from here or here