In recent years, microprocessor designers have been hitting the walls of memory latency, heat dissipation, and transistor count for single-core processors. As a result, the microprocessor industry keeps adding more cores to the same chip in order to keep delivering more powerful processors and keep pace with Moore's law. Consequently, application parallelism is becoming more fine-grained, which translates to a single application having hundreds or even thousands of threads working concurrently.

As concurrent programming grows in popularity, Herb Sutter makes the case that we need a consistent mental model for reasoning about concurrency. In his model, concurrency requirements and techniques are divided into three categories (pillars).

Pillar 1 – Responsiveness and Isolation

Pillar 1 is about running time-consuming operations (such as downloads and database transactions) on separate, dedicated background threads in order to keep time-sensitive threads (such as the UI thread) responsive, while letting the background threads communicate via asynchronous messages.

For this pillar, it doesn’t matter how many cores are available in the machine; we care about responsiveness even when we target a single-core machine. However, since some work is being done in parallel, we might see an increase in performance and make better use of the cores in the machine purely as a side effect.

Background Worker

When working with .NET, the BackgroundWorker abstraction can be used to execute the background work asynchronously.

We simply drag the BackgroundWorker component onto the form, add an event handler for the DoWork event, and call the time-consuming operation in this event handler. We call RunWorkerAsync to start the operation and CancelAsync to request its cancellation. While the operation makes progress, it can call ReportProgress to send progress update notifications.

To receive progress updates and completion notifications, we handle the ProgressChanged and RunWorkerCompleted events. The nice thing about the BackgroundWorker is that the ProgressChanged and RunWorkerCompleted handlers are executed in the context of the UI thread.
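The same pattern can be sketched outside .NET. The following Python sketch is only an illustration of the idea, not the BackgroundWorker API: a background thread does the work and posts progress and completion messages to a queue, and a stand-in for the UI thread's message loop consumes them. The names `run_in_background` and `pump_messages` are hypothetical, and a real UI loop would poll the queue rather than block on it.

```python
import queue
import threading


def run_in_background(work, steps):
    """Run work(step) for each step on a background thread.

    Returns the thread and a queue on which the worker posts
    ("progress", percent) messages followed by one ("done", results) message.
    """
    messages = queue.Queue()

    def worker():
        results = []
        for i in range(steps):
            results.append(work(i))
            # Analogous to reporting progress from the background operation.
            messages.put(("progress", (i + 1) * 100 // steps))
        # Analogous to the "work completed" notification.
        messages.put(("done", results))

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread, messages


def pump_messages(messages):
    """Stand-in for the UI thread: handle messages until the work is done."""
    progress_seen = []
    while True:
        kind, payload = messages.get()
        if kind == "progress":
            progress_seen.append(payload)
        else:
            return progress_seen, payload


if __name__ == "__main__":
    _, inbox = run_in_background(lambda i: i * i, steps=4)
    progress, results = pump_messages(inbox)
    print(progress, results)
```

Because the two threads share only the message queue, the "UI" side never touches the worker's intermediate state, which is the isolation half of this pillar.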

Pillar 2 – Throughput and Scalability

Pillar 2 is about scaling to an increasing number of cores and maintaining high throughput, mainly by introducing more and more parallelism.

Perhaps the biggest challenge associated with scalability is finding the most efficient way to control the concurrency of the application. In practice, we need to figure out how to divide the work and distribute it among the threads in a way that puts the maximum number of cores to work with minimum contention.

Fortunately, we are not alone in this battle: frameworks and tools such as OpenMP, TPL and Parallel Studio can help us produce scalable applications that will execute faster and faster on future machines with more and more cores (re-enabling the free lunch).

Thread vs. ThreadPool

The first problem that we face when executing asynchronous work is that the cost of thread creation can significantly hurt performance. Luckily, the thread pool solves that by maintaining a heuristically sized "pool" of threads that are just waiting for work items; when they are done executing a work item, they go back to the pool and wait for the next one.

The thread pool employs a ‘thread injection and retirement algorithm’ that optimizes the creation and destruction of threads by looking at the number of running work items and the number of cores available in the machine.
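Thread reuse is easy to observe in a small experiment. The sketch below uses Python's `ThreadPoolExecutor` as a stand-in for the .NET thread pool. Note the assumption: Python's pool has a fixed upper bound and no injection and retirement heuristic, so this only illustrates that many work items are served by a few reused threads. The helper name `run_on_pool` is hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def run_on_pool(n_items, n_workers):
    """Run n_items work items on a pool of at most n_workers threads.

    Returns the results and the set of distinct thread names that did the work.
    """
    used_threads = set()
    lock = threading.Lock()

    def work_item(x):
        # Record which pooled thread picked up this work item.
        with lock:
            used_threads.add(threading.current_thread().name)
        return x * 2

    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        results = list(pool.map(work_item, range(n_items)))
    return results, used_threads


if __name__ == "__main__":
    results, threads = run_on_pool(n_items=100, n_workers=4)
    # 100 work items, but never more than 4 threads were created.
    print(len(results), len(threads))
```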

ThreadPool vs. TPL (Task Parallel Library)

Before the introduction of .NET 4.0, in order to produce scalable programs, we didn’t have much of a choice but to use the thread pool directly or to create our own thread factory.

The thing with working directly with the thread pool is that as long as there are available threads in the pool (which is most of the time), it executes work items immediately. With that approach, if we don’t control the number of work items that we pass to the thread pool, we can quickly reach a point where there are too many threads executing work at once, resulting in a large number of context switches, or, at the other extreme, a point where work item execution is delayed for very long periods.

At the time, scalability wasn’t much of a concern, so dealing directly with the thread pool worked out just fine. However, with the many-core revolution/evolution knocking on our doors, there’s a need for a better solution. In practice, we need another ‘black box’ between the application and the thread pool that controls the number of work items delivered to the thread pool at a given time, in most cases according to the machine architecture, workload and CPU utilization.

In .NET 4.0, Microsoft introduced a new library called TPL (Task Parallel Library) that plays the role of the ‘black box’ mentioned above. TPL executes the asynchronous work (tasks) in a way that fits the number of available cores, and gives developers more control over the way in which tasks get scheduled and executed.

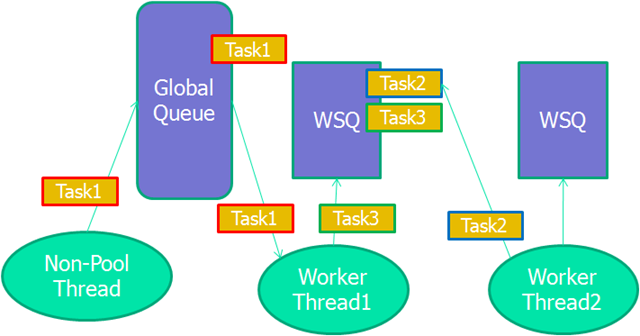

With TPL, developers separate the work into small pieces (tasks) and let the runtime execute them in a scalable fashion, naturally, via the thread pool. Tasks that are submitted for execution from the context of a non-TPL thread (such as the UI thread) are scheduled to the thread pool’s global queue. On the other hand, tasks that are submitted from the context of another task (their parent task) are stored in a separate queue owned by the worker thread of their parent task, and executed by the same worker thread in LIFO fashion. When a worker thread is done executing its tasks (parents and children), it looks for work in the global queue; if there isn't any, it tries to steal work from the local queues of its fellow threads.

The worker threads’ local queues are implemented as work-stealing queues (WSQ). A WSQ has two ends: it allows lock-free pushes and pops from one end (“private”), but requires synchronization at the other end (“public”). A worker thread pushes child tasks to the private end and pops tasks from the private end (in LIFO fashion), avoiding all locking and achieving great caching behavior. When a worker thread needs to steal, it grabs tasks from the public end of another thread’s queue, where synchronization is required.
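The two-ended behavior and the lookup order (own queue, then global queue, then stealing) can be sketched as follows. This is a simplified Python illustration and not the TPL implementation: the class and function names are hypothetical, and, unlike the real lock-free design, this sketch guards both ends of the queue with a single lock.

```python
import queue
import threading
from collections import deque


class WorkStealingQueue:
    """Simplified work-stealing queue (both ends locked in this sketch).

    The owner pushes and pops at the right ("private") end, LIFO.
    Thieves take from the left ("public") end, so they get the oldest task.
    Tasks must not be None, since None signals an empty queue.
    """

    def __init__(self):
        self._items = deque()
        self._lock = threading.Lock()

    def push(self, task):
        with self._lock:
            self._items.append(task)

    def pop(self):
        with self._lock:
            return self._items.pop() if self._items else None

    def steal(self):
        with self._lock:
            return self._items.popleft() if self._items else None


def next_task(own, global_queue, others):
    """Find work: own local queue, then the global queue, then steal."""
    task = own.pop()
    if task is not None:
        return task
    try:
        return global_queue.get_nowait()
    except queue.Empty:
        pass
    for victim in others:
        task = victim.steal()
        if task is not None:
            return task
    return None


if __name__ == "__main__":
    mine, theirs, shared = WorkStealingQueue(), WorkStealingQueue(), queue.Queue()
    for name in ("a", "b", "c"):
        theirs.push(name)
    print(next_task(mine, shared, [theirs]))  # steals the oldest task: a
    print(theirs.pop())                       # owner pops the newest task: c
```

Popping the newest task locally tends to hit data that is still warm in the cache, while stealing the oldest task keeps the thief away from the end the owner is working on.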

Pillar 3 – Consistency

With the addition of concurrency comes the responsibility for ensuring safety. When running multiple threads that access the same memory, we need to make sure that there's no corruption by applying the appropriate synchronization, and that while synchronizing there's no possibility of a deadlock.

Corruption can occur when one or more threads write a piece of data while other threads read or write that same piece of data. To avoid corruption, we use different kinds of locks and different locking schemes to protect the data from concurrent access. Some locks grant exclusive access to only one thread; others grant shared access to readers and exclusive access to writers. There’s also the immutability approach, where objects are designed as read-only and cloned on every write, so no lock is required on read. And the list goes on.

Sadly, in this battle we are alone, so there's a fundamental set of concepts that we need to learn and become comfortable with in order to avoid the many hazards associated with lock-based programming. The most common hazard is that we simply forget to take a lock when it's required, which can lead to data races and incorrect results. A less common hazard is a deadlock, which can arise when multiple locks are acquired in an inconsistent order. When there are more threads waiting at a lock than can be serviced, a lock convoy can arise, which means that a continuously growing backlog of requests remains unhandled and eventually begins timing out.

In order to reduce the risk of using locks, it's recommended to use 'out of the box' lock-free data structures, or to take some ideas from functional languages: instead of sharing mutable data, create a new clone of the data on every write and allow lock-free reads. In F#, the compiler does the cloning for us.
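In Python rather than F#, the clone-on-write idea might be sketched as follows. This is an illustration under stated assumptions, not the F# mechanism: the class name `CopyOnWriteSettings` is hypothetical, writers are serialized with a lock, and readers rely on a single reference read returning a snapshot that is never mutated afterwards.

```python
import threading
from types import MappingProxyType


class CopyOnWriteSettings:
    """Readers get a read-only snapshot without locking; writers clone and swap."""

    def __init__(self, initial):
        self._snapshot = MappingProxyType(dict(initial))
        self._write_lock = threading.Lock()  # serializes writers only

    def read(self):
        # A single reference read; the returned snapshot is never mutated.
        return self._snapshot

    def write(self, key, value):
        with self._write_lock:
            clone = dict(self._snapshot)  # clone the data on every write
            clone[key] = value
            # Publish the new version by swapping the reference.
            self._snapshot = MappingProxyType(clone)


if __name__ == "__main__":
    settings = CopyOnWriteSettings({"timeout": 30})
    before = settings.read()
    settings.write("timeout", 60)
    # The old snapshot is unchanged; new readers see the new version.
    print(before["timeout"], settings.read()["timeout"])
```

The trade-off is that every write pays for a full copy, so the approach suits data that is read far more often than it is written.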

Races, deadlocks and convoys aside, perhaps the most fundamental objection to locks is that they do not compose: abstractions that expose thread-safe operations may produce correct results when used independently, but expose invalid intermediate state when combined. A classic example of the composition problem is an activity that transfers money from one account object to another, where the intermediate state, in which neither account contains the money, must not be visible to other threads. The fact is that we can’t implement this feature without introducing some kind of global lock that surrounds the transfer and has to be taken whenever threads access the accounts.
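The account example can be made concrete with a small Python sketch. The `Account` class, `bank_lock`, `transfer` and `total` are hypothetical names introduced for illustration. Each account is individually thread-safe, yet the transfer is only atomic for observers that also take the global lock.

```python
import threading


class Account:
    """Each operation is thread-safe on its own."""

    def __init__(self, balance):
        self._balance = balance
        self._lock = threading.Lock()

    def deposit(self, amount):
        with self._lock:
            self._balance += amount

    def withdraw(self, amount):
        with self._lock:
            self._balance -= amount

    def balance(self):
        with self._lock:
            return self._balance


# Hypothetical global lock that every multi-account operation must take.
bank_lock = threading.Lock()


def transfer(src, dst, amount):
    with bank_lock:
        src.withdraw(amount)
        # Without bank_lock, another thread could observe the state here,
        # where the money is in neither account.
        dst.deposit(amount)


def total(accounts):
    # Readers must take the same global lock to see a consistent state.
    with bank_lock:
        return sum(account.balance() for account in accounts)


if __name__ == "__main__":
    a, b = Account(100), Account(100)
    transfer(a, b, 30)
    print(a.balance(), b.balance(), total([a, b]))
```

The cost is visible in the design: the per-account locks no longer buy any parallelism for transfers, and any caller that forgets `bank_lock` silently breaks the guarantee.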

Transactional Memory

In an attempt to provide a solution for data sharing that is easier to use and less error-prone than fine-grained locks, researchers are working on software transactional memory (STM). With STM, instead of using locks to synchronize access to shared objects, we simply wrap all the code that accesses those objects in a transaction and let the runtime execute it atomically and in isolation, doing the appropriate synchronization behind the scenes.

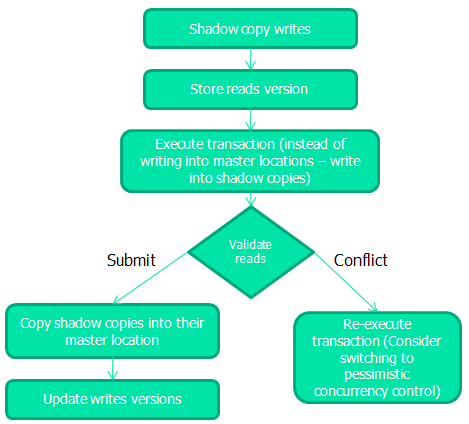

STM creates the illusion of a single lock while still maintaining great scalability by using optimistic concurrency control: instead of pessimistically acquiring a lock and letting only one thread enter the atomic region at a time, the runtime assumes that there is no contention and allows all the threads to execute atomic regions concurrently. Whenever a thread exits an atomic region, the runtime checks whether the assumption was incorrect (a transaction conflict); if so, it rolls back the changes that were made in the region and re-executes it.

As illustrated in the diagram above, STM copies the objects that are being written into shadow copies, records the versions of the objects that are being read, and executes the transaction, writing into the shadow copies instead of the objects’ master locations. At the end of the transaction, it compares the recorded versions of the reads with their current versions to see whether any of them has been changed by another transaction. If so, there’s a conflict, and it re-executes the transaction (there is no need to roll back, since the changes were made only on the shadow copies). Otherwise, it commits the transaction by copying the shadow copies into their master locations and updating the object versions (under the protection of fine-grained locks).
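The commit protocol described above can be sketched in a few dozen lines of Python. This is a toy illustration under stated assumptions, not a real STM runtime: the names `TVar`, `Transaction` and `atomically` are hypothetical, values are treated as immutable, and a transaction body may briefly observe stale values before the commit check rejects it.

```python
import threading


class TVar:
    """A shared object with a master value, a version number and its own lock."""

    def __init__(self, value):
        self.value = value
        self.version = 0
        self.lock = threading.Lock()


class Transaction:
    def __init__(self):
        self.read_versions = {}  # TVar -> version seen at first read
        self.shadow = {}         # TVar -> pending value (shadow copy)

    def read(self, tvar):
        if tvar in self.shadow:
            return self.shadow[tvar]
        with tvar.lock:
            value, version = tvar.value, tvar.version
        self.read_versions.setdefault(tvar, version)
        return value

    def write(self, tvar, value):
        # Writes go to the shadow copy, never to the master location.
        self.shadow[tvar] = value

    def commit(self):
        # Lock every touched object in a fixed order to avoid deadlock.
        touched = sorted(set(self.read_versions) | set(self.shadow), key=id)
        for tvar in touched:
            tvar.lock.acquire()
        try:
            # Validate: has anything we read been changed by another transaction?
            for tvar, seen in self.read_versions.items():
                if tvar.version != seen:
                    return False  # conflict; shadow copies are simply dropped
            # Publish shadow copies to the master locations and bump versions.
            for tvar, value in self.shadow.items():
                tvar.value = value
                tvar.version += 1
            return True
        finally:
            for tvar in touched:
                tvar.lock.release()


def atomically(body):
    """Run body(tx) optimistically, re-executing it until it commits."""
    while True:
        tx = Transaction()
        result = body(tx)
        if tx.commit():
            return result


if __name__ == "__main__":
    counter = TVar(0)
    atomically(lambda tx: tx.write(counter, tx.read(counter) + 1))
    print(counter.value, counter.version)
```

Locks are held only for the short validate-and-publish step at commit time, which is where the scalability of the optimistic approach comes from when conflicts are rare.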